Understanding Model Interpretability

In the era of artificial intelligence and machine learning, understanding how models make decisions has become crucial for ensuring transparency and trust. Model interpretability techniques like Partial Dependence Plots (PDP), Individual Conditional Expectation (ICE), Local Interpretable Model-agnostic Explanations (LIME), and SHapley Additive exPlanations (SHAP) provide powerful tools to peek inside the black box of complex algorithms.

The pursuit of transparent artificial intelligence has never been more critical. As organizations deploy increasingly sophisticated algorithms across high-stakes domains, the demand for model interpretability has transcended academic curiosity to become a regulatory and ethical imperative. Understanding how models make decisions is no longer optional—it's essential for building trust, ensuring fairness, and maintaining accountability in our AI-driven world.

Model interpretability addresses the fundamental challenge of making black box systems comprehensible to human stakeholders. When a loan application is denied, a medical diagnosis is suggested, or a hiring decision is influenced by algorithmic recommendations, affected parties deserve meaningful explanations. This transparency serves multiple constituencies: end-users seeking justification for decisions that impact their lives, practitioners needing to debug and improve model performance, and regulators ensuring compliance with emerging AI governance frameworks.

The interpretability landscape encompasses diverse methodological approaches, each with distinct strengths and limitations. Partial Dependence Plots reveal how individual features influence predictions across the entire dataset, while LIME provides localized explanations by approximating complex models with simpler, interpretable surrogates around specific instances. SHAP leverages game theory to assign fair attribution scores to features, and Individual Conditional Expectation curves show how feature changes affect individual predictions, complementing the averaged perspectives of PDPs.

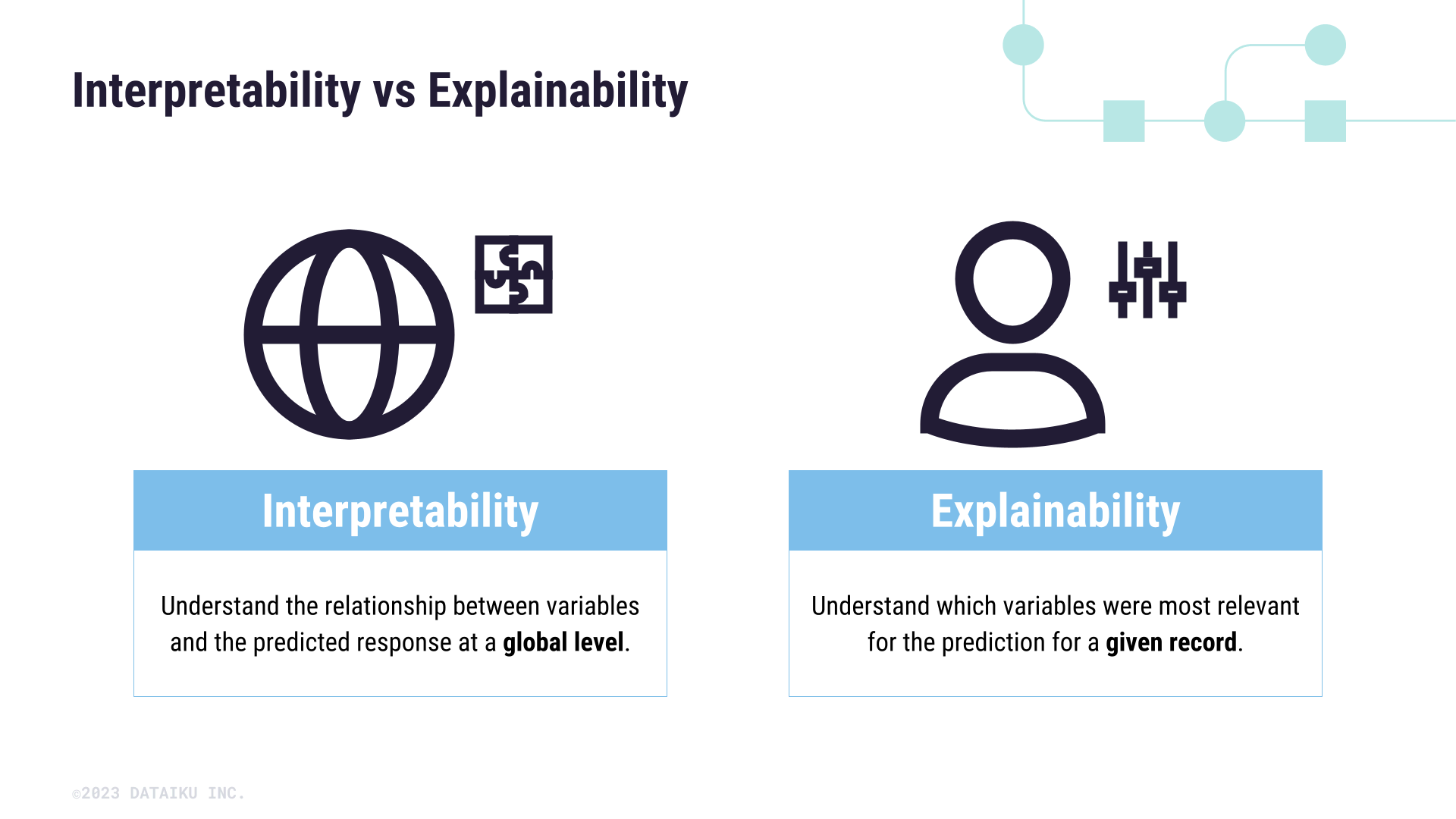

These techniques operate along a spectrum from global interpretability—understanding overall model behavior—to local interpretability—explaining specific predictions. The choice of method depends critically on context: regulatory compliance often demands consistent, theoretically grounded approaches like SHAP, while rapid prototyping might favor faster but less robust techniques like LIME. Success requires matching interpretability tools to specific stakeholder needs, model types, and deployment constraints while acknowledging that no single approach provides complete transparency into complex algorithmic decision-making.

Partial Dependence Plots (PDP)

Building on the previous section's case for interpretability, Partial Dependence Plots (PDPs) are a fundamental technique for understanding how individual features influence model predictions. PDPs visualize the marginal effect of one or more features by averaging the model's predictions over the observed values of the other features in the dataset, effectively isolating the relationship between the target feature and the predicted outcome.

The methodology involves creating a grid of values for the feature of interest, then systematically replacing that feature's value in every data point while keeping other features unchanged. The model's predictions are then averaged for each grid value, revealing how changes in the feature affect predictions on average. This approach makes PDPs model-agnostic, working equally well with tree-based models, neural networks, or any black-box algorithm.
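The grid-replace-average procedure above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a library API: `partial_dependence`, the toy `predict` function, and the three-row dataset are hypothetical, and any model exposing a batch `predict(X)` would plug in the same way.

```python
import numpy as np


def partial_dependence(predict, X, feature, grid):
    """Average model prediction with column `feature` forced to each grid value."""
    pd_values = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value                  # same value in every row
        pd_values.append(predict(X_mod).mean())    # average over the dataset
    return np.array(pd_values)


def predict(X):
    # Hypothetical toy model: linear in feature 0, quadratic in feature 1.
    return 2.0 * X[:, 0] + X[:, 1] ** 2


X = np.array([[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]])
grid = np.array([0.0, 1.0, 2.0])
pd_curve = partial_dependence(predict, X, feature=0, grid=grid)
# Each point equals 2 * value + mean(x1 ** 2) = 2 * value + 14 / 3
```

Because feature 0 enters additively, the curve is a straight line with slope 2; the constant 14/3 comes from averaging the other feature over the observed rows, which is where the feature-independence assumption enters.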

However, PDPs carry a critical assumption: feature independence. When features are correlated—a common occurrence in real-world data—PDPs can generate misleading visualizations by forcing the model to evaluate unrealistic feature combinations. For instance, a PDP might show predictions for "zero income with luxury spending," combinations that never occur naturally. This limitation becomes particularly problematic in domains like healthcare or finance where feature correlations are strong and interpretability errors have serious consequences.

Modern practitioners increasingly supplement PDPs with Accumulated Local Effects (ALE) plots for correlated features and uncertainty quantification techniques to provide confidence intervals around PDP estimates, addressing the statistical reliability concerns that have emerged in recent research.

Individual Conditional Expectation (ICE)

While Partial Dependence Plots reveal average feature effects, Individual Conditional Expectation (ICE) plots expose the heterogeneous relationships hidden beneath these averages. Unlike PDPs that aggregate predictions across all instances, ICE plots show how each individual data point responds as a single feature varies, creating multiple overlapping curves rather than one averaged line.

The mathematical foundation builds directly upon PDP methodology. For each observation $i$ and feature $x_j$, ICE computes $\hat{f}(x_j, x_C^{(i)})$ across a grid of $x_j$ values while holding the remaining features $x_C$ fixed at that instance's original values $x_C^{(i)}$. Averaging these $n$ curves recovers the PDP, $\hat{f}_{PD}(x_j) = \frac{1}{n}\sum_{i=1}^{n} \hat{f}(x_j, x_C^{(i)})$. The individual trajectories reveal subgroup behaviors that PDPs might obscure through averaging.

ICE plots excel at detecting feature interactions and heterogeneous effects. When PDP shows a flat line suggesting no relationship, ICE often reveals opposing trends—some instances increasing while others decrease—that cancel out in the average. This capability proves invaluable for identifying bias patterns, understanding model fairness across demographic groups, or debugging unexpected predictions for specific cases.

Implementation requires generating multiple synthetic versions of each data point with only the target feature modified, then plotting all resulting prediction curves. Centered ICE plots enhance interpretability by subtracting each curve's starting value, focusing attention on relative changes rather than absolute prediction levels. While computationally similar to PDP construction, ICE visualization can become cluttered with large datasets, typically requiring sampling or aggregation techniques for practical deployment.
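Reusing the same replace-and-predict loop, a sketch of ICE curves, their centered variant, and the PDP as their average might look like this. The toy model `x0 * x1` and the two-row dataset are hypothetical, chosen so that the two instances respond in opposite directions and reproduce the flat-PDP case described above.

```python
import numpy as np


def ice_curves(predict, X, feature, grid):
    """One prediction curve per row; result has shape (n_rows, len(grid))."""
    curves = np.empty((X.shape[0], len(grid)))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value      # vary only the target feature
        curves[:, k] = predict(X_mod)  # every row keeps its own x_C
    return curves


def center_ice(curves):
    """Centered ICE: subtract each curve's value at the first grid point."""
    return curves - curves[:, [0]]


def predict(X):
    # Hypothetical model with an interaction: the sign of x1 flips the slope in x0.
    return X[:, 0] * X[:, 1]


X = np.array([[0.0, 1.0], [0.0, -1.0]])
grid = np.array([0.0, 1.0, 2.0])
ice = ice_curves(predict, X, feature=0, grid=grid)  # one row rises 0, 1, 2; the other falls 0, -1, -2
pdp = ice.mean(axis=0)                              # averaging the curves gives a flat PDP
centered = center_ice(ice)                          # every curve now starts at 0
```

On large datasets, subsampling rows before calling `ice_curves` is a simple way to keep the resulting plot readable.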

Local Interpretable Model-agnostic Explanations (LIME)

While ICE plots reveal how individual instances respond to feature changes, Local Interpretable Model-agnostic Explanations (LIME) takes a fundamentally different approach to understanding model predictions. Instead of examining feature variations globally, LIME explains why a model made a specific prediction for a particular instance by creating locally faithful approximations using interpretable models.

LIME operates through strategic perturbation around the instance of interest. For tabular data, it samples from feature distributions while maintaining realistic value ranges. For text, it randomly removes words to observe prediction changes. For images, it masks superpixel regions to identify influential visual components. These perturbations generate a neighborhood dataset that LIME weights by proximity to the original instance.

The technique then trains an interpretable model—typically sparse linear regression—on this weighted neighborhood data. The resulting coefficients reveal which features contribute positively or negatively to the specific prediction. This local approximation provides intuitive explanations: highlighted words in sentiment analysis, influential image regions in computer vision, or critical feature thresholds in tabular predictions.
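A stripped-down version of this perturb, weight, and fit loop for continuous tabular features is sketched below. It is a simplification under stated assumptions, not the `lime` package: it perturbs with Gaussian noise of a chosen `scale`, weights samples with an exponential kernel (the `kernel_width` default is an assumption), and fits a plain weighted least-squares surrogate without the feature-selection step that makes LIME's explanations sparse.

```python
import numpy as np


def lime_explain(predict, x, scale, n_samples=5000, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around instance x."""
    rng = np.random.default_rng(seed)
    # 1. Perturb: sample a neighbourhood around x.
    Z = x + rng.normal(size=(n_samples, x.size)) * scale
    y = predict(Z)
    # 2. Weight each sample by its scaled distance to x.
    dist = np.sqrt((((Z - x) / scale) ** 2).sum(axis=1))
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 3. Weighted least squares: minimise sum_i w_i * (y_i - b0 - Z_i @ b) ** 2.
    A = np.hstack([np.ones((n_samples, 1)), Z])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]   # intercept, per-feature local effects


def black_box(Z):
    # Hypothetical nonlinear model: quadratic in feature 0, linear in feature 1.
    return Z[:, 0] ** 2 + Z[:, 1]


x = np.array([1.0, 0.0])
intercept, effects = lime_explain(black_box, x, scale=0.1)
# effects is close to [2, 1], the model's local slope in each feature at x
```

Rerunning with a different `seed` or `scale` shifts the coefficients slightly, which is one source of the instability often reported for LIME explanations.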

LIME's model-agnostic nature makes it particularly valuable for debugging individual predictions and building stakeholder trust. Unlike ICE plots that show feature-prediction relationships across the dataset, LIME answers "Why did the model classify this specific email as spam?" by identifying the words or patterns that most influenced that decision, which makes it a popular choice for explaining high-stakes individual predictions in healthcare, finance, and legal applications.

SHAP (SHapley Additive exPlanations)

While LIME operates on local neighborhoods, SHAP (SHapley Additive exPlanations) provides a unified theoretical framework rooted in cooperative game theory. Rather than fitting a heuristic local surrogate, SHAP treats each feature as a "player" and the prediction as a cooperative "payout", and its attributions are defined to satisfy three properties: local accuracy (feature attributions sum to the difference between the prediction and a baseline value), consistency (if a model changes so that a feature's contribution increases, its attribution never decreases), and missingness (features absent from the input receive zero attribution).

TreeSHAP computes these values efficiently for tree-based models, while KernelSHAP estimates them for any model at a higher computational cost. SHAP's visualizations, including force plots, summary plots, and dependence plots, offer both local and global interpretability insights, making it particularly valuable for high-stakes applications requiring regulatory compliance and stakeholder trust in machine learning decisions.
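For a handful of features, Shapley values can be computed exactly by enumerating every coalition, which makes the properties above easy to check. The sketch below is a toy, not the `shap` library: it defines a coalition's value by taking features in the coalition from `x` and the rest from a single hypothetical `baseline` vector, whereas KernelSHAP and TreeSHAP work with expectations over background data. Its cost grows as 2^M in the number of features M, so it only suits tiny models.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values by enumerating all coalitions (toy sizes only)."""
    m = len(x)

    def value(coalition):
        # Features in the coalition come from x; all others from the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(m)]
        return predict(z)

    phi = [0.0] * m
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(size) * factorial(m - size - 1) / factorial(m)
                phi[i] += weight * (value(s | {i}) - value(s))  # marginal contribution of i
    return phi


def toy_model(z):
    # Hypothetical model: main effect of z0, a z0*z1 interaction, and an unused z2.
    return 2 * z[0] + z[0] * z[1]


x, baseline = [1, 2, 5], [0, 0, 0]
phi = shapley_values(toy_model, x, baseline)   # approximately [3.0, 1.0, 0.0]
```

Feature 0 receives its own effect (2) plus half of the interaction term, feature 1 receives the other half, and the unused feature 2 gets zero (missingness); the attributions sum to f(x) - f(baseline) = 4 (local accuracy).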

Conclusions

Model interpretability techniques provide essential insights into complex machine learning systems, each offering unique perspectives and advantages. While PDPs and ICE plots excel at visualizing feature impacts, LIME and SHAP provide detailed local explanations. Together, these tools enable practitioners to build more transparent, trustworthy, and effective AI systems.

Understanding AI Agents vs Orchestrated Workflows

As artificial intelligence revolutionizes enterprise operations, executives face a critical decision between autonomous AI agents and orchestrated workflows. This strategic choice impacts organizational efficiency, scalability, and competitive advantage. Understanding the distinct capabilities, limitations, and use cases of each approach is essential for making informed decisions that align with business objectives and technological readiness.

A Comprehensive Framework for Enterprise AI Implementation Strategy

To build an effective enterprise AI strategy, organizations must first understand the fundamental distinction between AI agents and workflows. AI agents operate autonomously in dynamic environments, while workflows excel at automating predefined, rule-based sequences. This distinction shapes how companies can best deploy AI across their operations.

Understanding the Core Components

AI implementation strategies typically include:

- Automated workflows for routine, predictable tasks

- Autonomous agents for complex, dynamic scenarios

- Hybrid systems combining both approaches

- Integration layers connecting AI with existing systems

- Governance frameworks ensuring responsible AI use

Selecting the Right Automation Model

Choose workflows when you need:

- Consistent, repeatable processes

- Clear audit trails

- Predictable outcomes

- High-volume, routine tasks

Choose agents when you need:

- Variable scenarios requiring judgment

- Complex decision-making needs

- Dynamic customer interactions

- Situations needing real-time adaptation

Business Use Cases and Applications

Common enterprise applications include:

- Customer Service: Agents handle complex inquiries while workflows process routine requests

- Finance: Workflows manage transactions while agents detect fraud patterns

- Supply Chain: Agents optimize routing while workflows handle order processing

- HR: Workflows manage onboarding while agents assist with recruitment

- IT Support: Agents troubleshoot issues while workflows handle password resets

Decision-Making Framework for Executives

When evaluating AI implementation options:

- Assess task complexity and variability

- Calculate potential ROI for each approach

- Consider existing system integration needs

- Evaluate security and compliance requirements

- Factor in staff training and change management

Building a Scalable Framework

To ensure scalability:

- Start with pilot projects in low-risk areas

- Document successful patterns and approaches

- Build reusable components and templates

- Create clear governance guidelines

- Establish metrics for measuring success

Hybrid Implementation Strategies

Most enterprises benefit from a hybrid approach where:

- Workflows handle structured, repetitive processes

- Agents manage complex, variable tasks

- Both systems share data and insights

- Integration layers enable seamless operation

- Monitoring tools track performance across both

Conclusions

The choice between autonomous agents and orchestrated workflows isn't binary: successful enterprises often implement hybrid approaches tailored to their specific needs. By understanding the strengths and limitations of each model, organizations can create robust, scalable AI automation strategies that drive business value while maintaining necessary control and oversight.

Model Training: Revolutionizing AI Applications in Banking and Finance

Model Training: Transforming AI in Banking and Finance Operations

Estimated reading time: 7 minutes

Key Takeaways

- Model Training Defined: It's the process of teaching AI systems to make accurate decisions or predictions using large sets of data.

- Importance Highlighted: Mastering model training enables financial institutions to execute complex tasks seamlessly.

- Key Concepts Preview: This blog will explore Supervised Fine-Tuning (SFT), Reinforcement Learning with Human Feedback (RLHF), Retrieval-Augmented Generation (RAG), and the role of Search & Agents in enhancing AI Data Management.

Table of Contents

- Model Training: Transforming AI in Banking and Finance Operations

- Introduction

- Section 1: Overview of AI in Banking and Finance Operations

- Section 2: Understanding Model Training

- Section 3: Supervised Fine-Tuning (SFT)

- Section 4: Reinforcement Learning with Human Feedback (RLHF)

- Section 5: Retrieval-Augmented Generation (RAG)

- Section 6: Search & Agents in AI Data Management

- Section 7: Challenges and Considerations in AI Data Management

- Section 8: The Future of AI in Banking and Finance

- Conclusion

Introduction

Model Training is revolutionizing the way AI is applied in banking and finance operations. By harnessing the power of AI, financial institutions are achieving unprecedented levels of efficiency and service quality. Understanding and implementing advanced model training techniques is crucial for fully leveraging AI's potential in banking and finance operations.

Section 1: Overview of AI in Banking and Finance Operations

- Define AI in the Financial Context: AI simulates human intelligence in machines, enabling them to learn, reason, and correct themselves.

- Transformation Through AI:

- Operational Efficiency: AI automates routine tasks, reduces errors, and accelerates processes.

- Enhanced Customer Experience: AI enables personalization and quick responses to customer queries.

- Accurate Decision-Making: AI analyzes massive datasets for precise decision-making.

- Specific Applications:

- Fraud Detection: AI scrutinizes transaction patterns to spot fraud.

- Risk Assessment: Utilizes machine learning for creditworthiness evaluations and market risk predictions.

- Personalized Services: Offers customized financial advice and products.

- Process Automation: Facilitates data entry and compliance checks in back-office operations.

- Research Findings: AI in banking is predicted to save the industry around $1 trillion by 2030. Source URL

Section 2: Understanding Model Training

- Model Training Defined: It involves adjusting an AI model's parameters through data exposure, enabling pattern recognition and decision-making.

- Importance in Finance: Effective training equips AI with the ability to manage complex financial operations efficiently.

- Applications in Banking and Finance:

- Analyzing Financial Data: Models detect trends and anomalies.

- Predicting Market Trends: AI forecasts stock prices and market behavior.

- Automating Decision-Making: Handles credit approvals and investment choices.

- Key Point: Model training is integral to optimizing financial systems.

Section 3: Supervised Fine-Tuning (SFT)

- SFT Defined: Fine-tunes pre-trained AI models using labeled data specific to tasks.

- How SFT Works: It tailors a general model for specific tasks with relevant datasets.

- Role in Finance:

- Credit Scoring Models: Fine-tuning to assess creditworthiness.

- Fraud Detection Systems: Enhanced using known fraudulent and legitimate data.

- Customer Segmentation: Models tailored for targeted marketing.

- Benefits:

- Gains accuracy and task relevance.

- Reduces time and resources compared to building from scratch.

- Research Findings: SFT enhances AI capabilities in banking. Source URL
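The warm-start idea behind SFT can be shown with a deliberately tiny analogue: a logistic-regression classifier whose weights `w_pretrained` stand in for a pre-trained model, fine-tuned by gradient descent on a few labeled task examples. All names and numbers here are hypothetical; SFT of large language models applies the same principle (continue supervised training from pre-trained parameters) with far larger models and token-level losses.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(w, X, y):
    p = sigmoid(X @ w)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))


def fine_tune(w_pretrained, X, y, lr=0.1, epochs=200):
    """Continue supervised training from pre-trained weights on labeled task data."""
    w = w_pretrained.copy()                  # start from the pre-trained weights, not zeros
    for _ in range(epochs):
        p = sigmoid(X @ w)
        w -= lr * X.T @ (p - y) / len(y)     # gradient step on the mean log loss
    return w


# Hypothetical labeled task data: [bias, feature_a, feature_b] -> label (1 = positive class)
X = np.array([[1.0, 0.5, 1.0], [1.0, 2.0, 0.2], [1.0, 0.3, 1.5], [1.0, 1.8, 0.1]])
y = np.array([0.0, 1.0, 0.0, 1.0])
w_pretrained = np.array([0.0, 0.2, 0.2])     # stand-in for general-purpose pre-trained weights

w_tuned = fine_tune(w_pretrained, X, y)
loss_before = log_loss(w_pretrained, X, y)
loss_after = log_loss(w_tuned, X, y)         # lower: the model has adapted to the task
```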

Section 4: Reinforcement Learning with Human Feedback (RLHF)

- RLHF Defined: Marries machine learning with human input for improved AI performance.

- How RLHF Works: Involves AI learning from a feedback loop that includes human guidance.

- Applications in Finance:

- Trading Algorithms: AI evolves trading strategies with expert feedback.

- Customer Service Chatbots: Adapt interactions based on feedback.

- Risk Assessment Models: Leverages human expertise for precise predictions.

- Benefits:

- Merges human intelligence with AI.

- Adapts effectively to financial challenges.

- Research Findings: RLHF showcases significant success in banking AI applications. Source URL
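One concrete piece of the RLHF feedback loop is the reward model, trained on human comparisons between two candidate responses. The sketch below fits a linear reward with the Bradley-Terry pairwise loss commonly used for this step. The feature vectors (imagined clarity and verbosity scores for chatbot replies) are hypothetical, and the later policy-optimization stage (for example PPO) is omitted.

```python
import numpy as np


def train_reward_model(chosen, rejected, lr=0.5, steps=500):
    """Fit a linear reward r(x) = w @ x from pairwise human preferences."""
    diff = chosen - rejected                      # each row: preferred minus rejected
    w = np.zeros(diff.shape[1])
    for _ in range(steps):
        margin = diff @ w                         # r(chosen) - r(rejected)
        p = 1.0 / (1.0 + np.exp(-margin))         # Bradley-Terry: P(chosen is preferred)
        grad = -((1.0 - p)[:, None] * diff).mean(axis=0)  # gradient of mean -log p
        w -= lr * grad
    return w


# Hypothetical feature vectors for pairs of chatbot replies: [clarity, verbosity].
chosen = np.array([[1.0, 0.2], [0.8, 0.5], [0.9, 0.1]])
rejected = np.array([[0.2, 0.3], [0.1, 0.6], [0.3, 0.0]])

w = train_reward_model(chosen, rejected)
margins = (chosen - rejected) @ w   # positive: each preferred reply now scores higher
```

In a full RLHF pipeline the reward model is usually a neural network scoring whole responses, and its scores drive reinforcement-learning updates to the policy model.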

Section 5: Retrieval-Augmented Generation (RAG)

- RAG Defined: Integrates information retrieval with text generation for precise results.

- How RAG Works: AI retrieves pertinent data during content generation.

- Utility in Banking:

- Financial Reports: Generates comprehensive reports with real-time data.

- Customer Queries: Provides precise answers using updated info.

- Regulatory Compliance: Aids compliance via relevant data retrievals.

- Benefits: Generates accurate, timely outputs by using current information.

- Research Findings: RAG significantly boosts customer satisfaction in finance. Source URL https://editingdestiny.duckdns.org/retrieval-augmented-generation-ai-revolution
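The retrieve-then-generate pattern can be sketched without external services. In this minimal example the knowledge base is three made-up policy snippets, retrieval is a bag-of-words cosine similarity (production systems typically use dense embeddings and a vector index), and the generation step stops at building the augmented prompt that would be sent to a language model. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_prompt(query, documents, k=2):
    """Augment the question with retrieved context before generation."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge-base snippets.
DOCS = [
    "Domestic wire transfers cost a flat fee listed in the current fee schedule.",
    "Savings account interest rates are reviewed and updated monthly.",
    "Card disputes must be filed within the window stated in the cardholder agreement.",
]
query = "How much does a wire transfer cost?"
prompt = build_prompt(query, DOCS, k=1)   # this string would be passed to the LLM
```

Because the context is fetched at question time, updating `DOCS` changes the answers without retraining the model, which is the property the bullets above attribute to RAG.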

Section 6: Search & Agents in AI Data Management

- Search Algorithms and AI Agents Defined:

- Search Algorithms: Locate specific data swiftly.

- AI Agents: Autonomous programs that perceive and act within environments.

- Functions in Data Management: Ensure efficient data handling and decision automation.

- Applications in Finance:

- Portfolio Management: AI adjusts investments using real-time data.

- Real-Time Analysis: Monitors markets for trends using search and analysis.

- Automated Trading: Executes trades based on strategic algorithms.

- Benefits: Enhances responsiveness and operational efficiency in data handling.

- Research Findings: Demonstrates enhanced trading efficiency through AI agents. Source URL

Section 7: Challenges and Considerations in AI Data Management

- Data Privacy and Security:

- Issues: Threats like data breaches.

- Solutions: Employ encryption and stringent access measures.

- Regulatory Compliance:

- Issues: Compliance with laws like GDPR.

- Solutions: Integrate compliance checks within AI operations.

- Ethical Considerations:

- Issues: Avoiding biased AI decisions.

- Solutions: Implement ethical frameworks and audits.

- Potential Biases in AI Models:

- Issues: Skewed decisions due to biased data.

- Solutions: Use diverse datasets and employ model audits.

- Research Findings: Emphasizes the role of ethics in AI deployment in financial services. Source URL

Section 8: The Future of AI in Banking and Finance

- Upcoming Trends:

- Generative AI for Personalized Advice: Custom financial planning through AI.

- Advanced Risk Management: Real-time AI-based monitoring.

- Sophisticated Trading Systems: AI employed in high-frequency trading.

- Advancements in Model Training: Innovations in self-supervised and transfer learning methods.

- Staying Ahead: Importance of embracing AI advancements for staying competitive.

- Research Findings: Forecasts AI's transformative impact on banking. Source URL

Conclusion

- Importance of Model Training: SFT, RLHF, and RAG are transforming financial operations.

- Understanding Matters: Grasping these cutting-edge technologies is imperative for maximizing AI's potential.

- Continuous Adaptation: Financial institutions should keep abreast of AI advancements.

- Balancing Innovation and Responsibility: Embracing innovation must go hand in hand with addressing its challenges responsibly.

Fundamentals of Tokenization in Language Processing

In the era of advanced artificial intelligence, Large Language Models (LLMs) have revolutionized how machines process and generate human language. At the heart of these sophisticated systems lies a crucial preprocessing technique called Byte Pair Encoding (BPE). This tokenization method bridges the gap between raw text and the numerical representations that LLMs can understand, making it possible for models to process language efficiently and effectively.

A Deep Dive into How Byte Pair Encoding Powers Large Language Models

Large Language Models (LLMs) process text in a fundamentally different way than humans do. Instead of reading word by word, they work with tokens - smaller units that can represent characters, parts of words, or entire words. At the heart of this process is Byte Pair Encoding (BPE), a clever technique that breaks down text into manageable pieces.

Why Tokens Matter

Think of tokens as the basic building blocks LLMs use to understand language. Before an LLM can process any text, it needs to convert that text into numbers. This conversion happens through tokenization, and BPE is one of the most effective ways to do it.

For example, the word "understanding" might be split into tokens like "under" and "standing" because these pieces appear frequently in English text. This is more efficient than processing the word character by character, and more flexible than treating each complete word as a single token.

The Power of BPE

BPE brings several key advantages to language models:

- It finds common patterns in text automatically

- It works across different languages without modification

- It handles rare words by breaking them into familiar pieces

- It keeps vocabulary size manageable while maintaining meaning

Consider how BPE handles the word "cryptocurrency." Instead of needing a single token for this relatively new word, BPE might break it into "crypto" and "currency" - pieces it already knows from other contexts. This allows LLMs to understand new combinations of familiar concepts.

Byte Pair Encoding: Core Mechanics

Byte Pair Encoding (BPE) evolved from a simple text compression method into the backbone of modern language model tokenization. But how does it actually work?

The Core BPE Algorithm

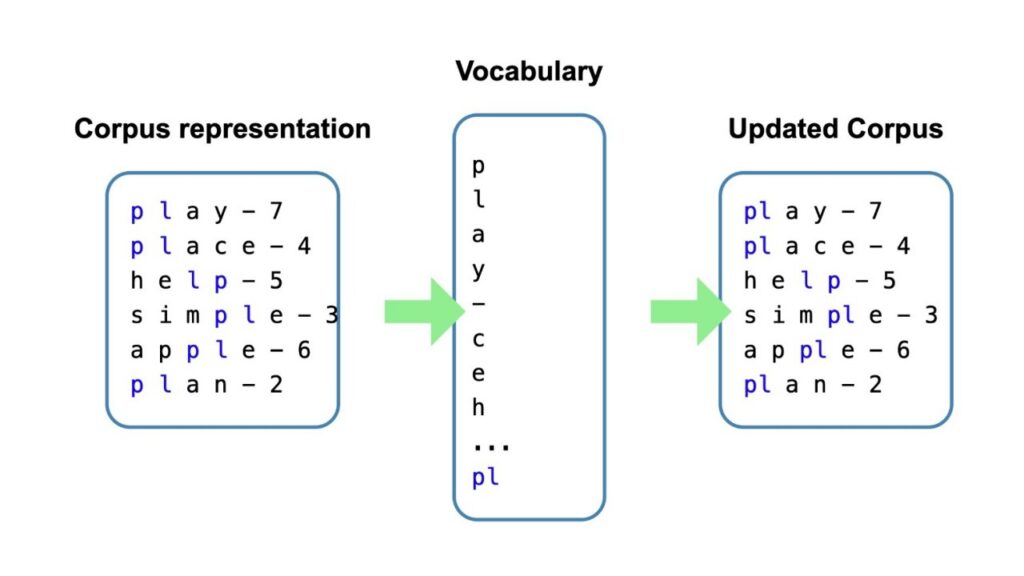

BPE follows a straightforward process:

- Start with individual characters as the base vocabulary

- Count all adjacent character pairs in the training text

- Merge the most frequent pair into a new token

- Repeat until reaching the desired vocabulary size

For example, if "er" appears frequently in words like "lower," "higher," and "faster," BPE combines these characters into a single token. This process builds an efficient vocabulary that captures common patterns in the language.
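The merge loop can be written directly from the four steps above. This is a minimal educational sketch in the style of the original subword-BPE recipe, not any production tokenizer: it works on a list of words, counts pairs weighted by word frequency, and stops after `num_merges` merges, so the final vocabulary is the base characters plus one new token per merge.

```python
from collections import Counter


def count_pairs(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs


def merge_pair(vocab, pair):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = Counter()
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] += freq
    return merged


def train_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words."""
    vocab = Counter(tuple(word) for word in words)   # each word as a tuple of characters
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(vocab)
        if not pairs:
            break                                    # every word is already one symbol
        best = max(pairs, key=pairs.get)             # most frequent pair (first one on ties)
        vocab = merge_pair(vocab, best)
        merges.append(best)
    return merges


merges = train_bpe(["lower", "higher", "faster"], num_merges=3)
print(merges[0])  # ('e', 'r'): the pair shared by all three words
```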

Why BPE Shines in Language Models

BPE offers several key advantages that make it ideal for Large Language Models (LLMs):

- Handles unknown words by breaking them into familiar subwords

- Balances vocabulary size and text coverage

- Works across multiple languages without modification

- Preserves meaningful word components (like prefixes and suffixes)

From Text to Tokens: The Process

When an LLM processes text, the BPE tokenizer:

- Converts the input text to UTF-8 bytes

- Applies the learned merge rules in order

- Splits the text into tokens based on the vocabulary

Consider the word "unstoppable". A BPE tokenizer might split it into "un" + "stopp" + "able", using common subwords it learned during training (the pieces must concatenate back to the full word, so the doubled "p" has to land in one of them). This helps the model understand the meaning through familiar components.
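Applying learned merges to new text is the inference-time counterpart of training. The sketch below repeatedly merges the adjacent pair with the lowest rank (the earliest learned), which for a merge list produced by training gives the same result as replaying the merges in order. The merge list is hypothetical, hand-picked so that "unstoppable" ends up as "un" + "stopp" + "able"; a real tokenizer would also map each token to an integer ID.

```python
def bpe_encode(word, merges):
    """Tokenize one word by applying learned merges, earliest-learned first."""
    ranks = {pair: rank for rank, pair in enumerate(merges)}
    tokens = list(word)                      # start from single characters
    while len(tokens) > 1:
        # Rank every adjacent pair; pairs never learned get an infinite rank.
        candidates = [
            (ranks.get((a, b), float("inf")), i)
            for i, (a, b) in enumerate(zip(tokens, tokens[1:]))
        ]
        best_rank, i = min(candidates)       # lowest rank, leftmost on ties
        if best_rank == float("inf"):
            break                            # no learned merge applies any more
        tokens[i:i + 2] = [tokens[i] + tokens[i + 1]]
    return tokens


# Hypothetical merge list, in the order it was "learned".
MERGES = [("u", "n"), ("s", "t"), ("st", "o"), ("sto", "p"), ("stop", "p"),
          ("a", "b"), ("l", "e"), ("ab", "le")]

print(bpe_encode("unstoppable", MERGES))  # ['un', 'stopp', 'able']
```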

Technical Implementation

Modern LLMs use optimized BPE implementations that improve on the basic algorithm. For instance, GPT models use a variant called byte-level BPE, which ensures any Unicode text can be processed without special handling.

The vocabulary size varies by model:

- Most LLMs use 30,000 to 50,000 tokens

- Larger models may use over 100,000 tokens

- The size balances coverage against memory and processing requirements

BPE's efficiency comes from a simple greedy rule: at each step it merges the most frequent adjacent pair, which greedily shortens the encoded training text. It does not model linguistics explicitly; meaningful subword units emerge because frequent character sequences often coincide with common word parts.

Practical Benefits

This approach delivers real advantages:

- Reduces token count for common phrases

- Maintains readability of rare words

- Supports efficient model training

- Enables cross-lingual capabilities

For example, technical terms like "neural" and "network" might each be single tokens because they appear often in AI literature, while rare words get split into interpretable pieces.

BPE's Role in Modern Language Models

Building on our understanding of BPE's core mechanics, let's explore how this tokenization method fundamentally shapes modern Large Language Models (LLMs). The way BPE breaks down text affects everything from model training to inference.

Why LLMs Need BPE

Modern language models process text as sequences of tokens, not raw characters. Byte Pair Encoding solves three critical challenges:

- Vocabulary size management - Instead of millions of whole words, BPE creates a smaller set of subword tokens

- Out-of-vocabulary handling - New or rare words can be broken into known subword pieces

- Compression efficiency - Common patterns get their own tokens, reducing sequence lengths

Impact on Model Architecture

BPE's design influences key aspects of LLM architecture:

- The input embedding matrix has one row per entry in the BPE vocabulary

- Position encodings index BPE token positions, so context length is counted in tokens

- Attention mechanisms operate over BPE tokens rather than characters or words

For example, GPT-3 uses a 50,257-token vocabulary created through BPE. This determines the size of its input embedding matrix and shapes how the model processes text.
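The link between vocabulary size and the embedding matrix comes down to simple arithmetic: the matrix has one row per token ID. The sketch shows a token-ID lookup with a tiny made-up model, then the row-times-width count for GPT-3, assuming the 12,288-dimensional hidden size reported for its largest (175B) configuration.

```python
import numpy as np

vocab_size, d_model = 10, 4                            # tiny hypothetical model
rng = np.random.default_rng(0)
embedding = rng.normal(size=(vocab_size, d_model))      # one row per BPE token ID

token_ids = np.array([3, 7, 7, 1])                      # hypothetical BPE output
vectors = embedding[token_ids]                          # shape (4, d_model); repeated IDs share a row

# Same arithmetic at GPT-3 scale (assumed d_model = 12,288 for the 175B model).
gpt3_embedding_params = 50_257 * 12_288                 # 617,558,016 weights
```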

Training Considerations

When training LLMs, BPE affects several key areas:

- Data preprocessing - Raw text must be consistently tokenized using the same BPE rules

- Batch construction - Sequences in a batch are padded (or packed) to a common length measured in BPE tokens

- Loss calculation - The model predicts the next BPE token, not the next character or word
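The batching and loss points above can be made concrete with a small helper that turns BPE token-ID sequences into next-token training pairs. The token IDs, `pad_id`, and the helper name are hypothetical; the mask marks which target positions count toward the loss so that padding is ignored.

```python
def make_lm_batch(sequences, pad_id):
    """Pad token-ID sequences and build (inputs, targets, loss_mask) for next-token prediction."""
    width = max(len(seq) for seq in sequences) - 1   # inputs drop the last token
    inputs, targets, loss_mask = [], [], []
    for seq in sequences:
        x, y = seq[:-1], seq[1:]                     # each target is the following BPE token
        pad = width - len(x)
        inputs.append(x + [pad_id] * pad)
        targets.append(y + [pad_id] * pad)
        loss_mask.append([1] * len(x) + [0] * pad)  # 0 = ignore padded positions in the loss
    return inputs, targets, loss_mask


inputs, targets, loss_mask = make_lm_batch([[5, 6, 7, 8], [9, 10]], pad_id=0)
# inputs    -> [[5, 6, 7], [9, 0, 0]]
# targets   -> [[6, 7, 8], [10, 0, 0]]
# loss_mask -> [[1, 1, 1], [1, 0, 0]]
```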

Semantic Understanding

Research shows that BPE tokenization influences how LLMs develop semantic understanding:

- Common words stay whole, preserving their semantic unity

- Morphemes (word parts that carry meaning) often become single tokens

- Related words share subword tokens, helping models recognize patterns

Practical Implications

The way BPE works affects how we use LLMs:

- Input processing must match the model's BPE tokenization exactly

- Token limits are based on BPE tokens, not raw characters

- Cost calculations for API calls often use BPE token counts

For multilingual models, BPE helps handle different scripts and character sets efficiently. It automatically adapts to the statistical patterns of each language in the training data.

Recent Advances

New research continues to improve how BPE works in LLMs:

- Topic modeling in BPE token space

- Optimized vocabulary selection for specific domains

- Enhanced handling of numerical and special characters

These improvements help LLMs better understand and generate text while maintaining computational efficiency.

Advanced Applications and Variations

The Core BPE Process

Byte Pair Encoding (BPE) works through a systematic process of identifying and combining frequent character pairs into new tokens. While the previous chapter covered its role in language models, let's examine exactly how it processes text:

- Start with individual characters as the base vocabulary

- Count all adjacent character pairs in the training data

- Merge the most frequent pair into a new token

- Update the text with the new merged tokens

- Repeat until reaching the target vocabulary size

A Practical Example

Let's see how BPE handles the word "learning" step by step:

- Initial tokens: l, e, a, r, n, i, n, g

- First merge: The most common pair, "i" + "n", becomes the token "in"

- Result: l, e, a, r, n, in, g

- Next merge: If "ea" is frequent, it becomes one token

- Result: l, ea, r, n, in, g

Implementation Details

Modern language models typically use vocabularies of 50,000 to 100,000 tokens. The exact size balances compression against precision. Smaller vocabularies mean more tokens per word but better handling of rare cases. Larger vocabularies capture more complete words but need more memory.

Byte-Level Implementation

Byte-level BPE adds an important twist to the basic algorithm. Instead of working with raw characters, it:

- Converts text to UTF-8 bytes first

- Treats these bytes as the basic units

- Ensures any text can be tokenized without unknown tokens

- Handles all Unicode characters efficiently
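The byte-level starting point is easy to see in Python: every string encodes to UTF-8 bytes in the range 0 to 255, so a 256-entry base vocabulary covers any input before a single merge is learned. The sketch shows only that base step (the helper names are hypothetical); learned merges would then combine frequent byte pairs exactly as in character-level BPE.

```python
def to_byte_tokens(text):
    """Byte-level BPE starting point: one base token ID (0-255) per UTF-8 byte."""
    return list(text.encode("utf-8"))


def from_byte_tokens(ids):
    """Invert the mapping: byte IDs back to a string."""
    return bytes(ids).decode("utf-8")


ids = to_byte_tokens("café 日本")
# ASCII characters take one byte each, "é" takes two, and each CJK character three,
# so there are 12 base tokens and none of them is "unknown".
```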

Performance Optimizations

Real-world BPE implementations use several optimizations:

- Frequency caching: Store pair counts to avoid repeated scans

- Parallel processing: Split the corpus for faster pair counting

- Pruning: Remove rare tokens to prevent vocabulary bloat

- Regex pre-tokenization: Split text into word-like chunks with a regular expression, so merges never cross chunk boundaries and results can be cached per chunk

Error Handling and Edge Cases

Practical BPE tokenizers add rules for handling challenging text:

- Spaces get special treatment as word boundaries

- Numbers split into logical digit groups

- Punctuation marks become separate tokens

- Unicode symbols split into byte sequences
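Rules like these usually live in a pre-tokenization step that splits text into chunks before any merges run. The pattern below is a simplified, ASCII-only illustration loosely modeled on the regex splitters used with GPT-style byte-level BPE; it is an assumption for demonstration, not any model's actual pattern. Leading spaces attach to words, punctuation is split off, and digits are grouped in runs of at most three.

```python
import re

# Simplified, hypothetical pre-tokenizer pattern (ASCII only).
PRETOKENIZE = re.compile(
    r"'s|'t|'re|'ve|'m|'ll|'d"     # common English contractions
    r"| ?[A-Za-z]+"                # words, keeping one leading space
    r"|[0-9]{1,3}"                 # numbers in groups of up to three digits
    r"| ?[^\sA-Za-z0-9]+"          # punctuation and other symbols
    r"|\s+(?!\S)|\s+"              # remaining whitespace
)


def pretokenize(text):
    """Split text into chunks; BPE merges are then applied within each chunk."""
    return PRETOKENIZE.findall(text)


print(pretokenize("Hello world, it's 2024!"))
# ['Hello', ' world', ',', ' it', "'s", ' ', '202', '4', '!']
```

Because every character matches some alternative, joining the chunks always reproduces the original text, so no information is lost before merging.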

Memory and Speed Tradeoffs

The implementation involves key tradeoffs:

- Larger vocabularies produce shorter token sequences (faster processing) but use more memory

- Smaller vocabularies need less memory for embeddings but produce more tokens per word

- Cache size affects speed versus memory usage

- Preprocessing steps add initial overhead but improve runtime speed

These technical details show why BPE became the foundation for modern language models. It offers a practical balance of speed, memory efficiency, and linguistic understanding that scales well to massive datasets.

Future Developments and Optimization

The Foundation of Modern LLM Processing

Large Language Models (LLMs) process text through a critical first step: converting human-readable words into numbers. At the heart of this conversion lies Byte Pair Encoding (BPE), a clever method that breaks text into meaningful pieces called tokens.

BPE strikes a practical balance between character-level and word-level tokenization. It creates a vocabulary of subword units that captures common patterns in language while keeping the total number of tokens manageable. Many modern LLMs use vocabularies of roughly 50,000 to 100,000 tokens, though some go well beyond that range.

How BPE Works in Practice

The process follows these steps:

- Start with individual characters as tokens

- Count pairs of adjacent tokens

- Merge the most frequent pair into a new token

- Repeat until reaching the desired vocabulary size
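The four steps above can be sketched as a short training loop. This is a minimal illustration on a toy word-frequency table. It omits the end-of-word marker used in the original formulation and the byte-level base vocabulary. It stops after a fixed number of merges, since the final vocabulary size equals the base symbols plus the merges learned. The function names are hypothetical.

```python
from collections import Counter

def get_pair_counts(corpus: dict[tuple[str, ...], int]) -> Counter:
    """Step 2: count adjacent token pairs, weighted by word frequency."""
    counts: Counter = Counter()
    for symbols, freq in corpus.items():
        for pair in zip(symbols, symbols[1:]):
            counts[pair] += freq
    return counts

def merge_pair(corpus: dict[tuple[str, ...], int], pair: tuple[str, str]) -> dict[tuple[str, ...], int]:
    """Step 3: replace every occurrence of `pair` with one merged token."""
    merged: dict[tuple[str, ...], int] = {}
    for symbols, freq in corpus.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(word_freqs: dict[str, int], num_merges: int):
    """Start from characters (step 1) and repeatedly merge the most frequent pair."""
    corpus = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):  # step 4: stop at the target number of merges
        counts = get_pair_counts(corpus)
        if not counts:
            break
        best = max(counts, key=counts.get)  # ties go to the pair counted first
        merges.append(best)
        corpus = merge_pair(corpus, best)
    return merges, corpus

merges, corpus = train_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)
print(merges)  # [('e', 's'), ('es', 't'), ('l', 'o')]
```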

This approach naturally discovers meaningful units like common prefixes (un-, re-), suffixes (-ing, -ed), and word stems. For example, "running" might become ["run", "ning"], allowing the model to recognize parts of new words it encounters.
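Once merges are learned, a new word is tokenized by replaying them in the order they were learned. The merge list below is hypothetical. It was chosen only to reproduce the "running" example and is not taken from any real tokenizer.

```python
def apply_merges(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Tokenize a word by applying learned merges in the order they were learned."""
    symbols = list(word)
    for left, right in merges:
        i, out = 0, []
        while i < len(symbols):
            if i + 1 < len(symbols) and symbols[i] == left and symbols[i + 1] == right:
                out.append(left + right)
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        symbols = out
    return symbols

# Hypothetical merge list, for illustration only.
merges = [("r", "u"), ("ru", "n"), ("n", "i"), ("ni", "n"), ("nin", "g")]
print(apply_merges("running", merges))  # ['run', 'ning']
print(apply_merges("runs", merges))     # ['run', 's']
```

"runs" never appeared in the merge list as a whole word, yet it still reuses the learned "run" piece.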

Benefits for Language Models

BPE provides several key advantages:

- Efficient compression: English text averages roughly 1.3 tokens per word, far fewer tokens than a character-level encoding would need

- Better handling of rare words by breaking them into familiar pieces

- Automatic discovery of meaningful language patterns

- Guaranteed encoding of any text through byte-level fallback

Modern Implementations

Current LLMs use sophisticated versions of BPE. Models like GPT-2 and RoBERTa implement byte-level BPE, which first converts text to UTF-8 bytes. This ensures the system can handle any character in any language. BERT, by contrast, uses the related WordPiece algorithm.

Latest Developments

Recent advances in tokenization include:

- Dynamic vocabularies that adapt to different content types

- Improved handling of multiple languages in the same text

- Better processing of technical content and code

- More efficient token allocation for non-English languages

These improvements help models process text more naturally across languages and domains while maintaining computational efficiency.

Challenges and Future Directions

Current research focuses on several areas:

- Reducing token count differences between languages

- Handling languages with unique writing systems more effectively

- Developing context-aware tokenization methods

- Creating more efficient multilingual vocabularies

As models grow more sophisticated, tokenization continues to evolve, balancing the need for efficient processing with better language understanding across diverse contexts.

Conclusions

BPE tokenization represents a crucial breakthrough in making large language models practical and effective. Its elegant balance between efficiency and effectiveness has made it the backbone of modern NLP systems. As language models continue to evolve, BPE and its variants will likely remain fundamental to their architecture, while new innovations build upon its solid foundation.

Defining Fidelity Risk in AI Document Processing

As organizations increasingly rely on artificial intelligence for document processing and data extraction, the concept of fidelity risk has become paramount. This risk encompasses the potential discrepancies between source documents and AI-generated outputs, including issues like hallucinations, semantic drift, and information misrepresentation. Understanding and managing these risks is crucial for maintaining data integrity and ensuring reliable information extraction.

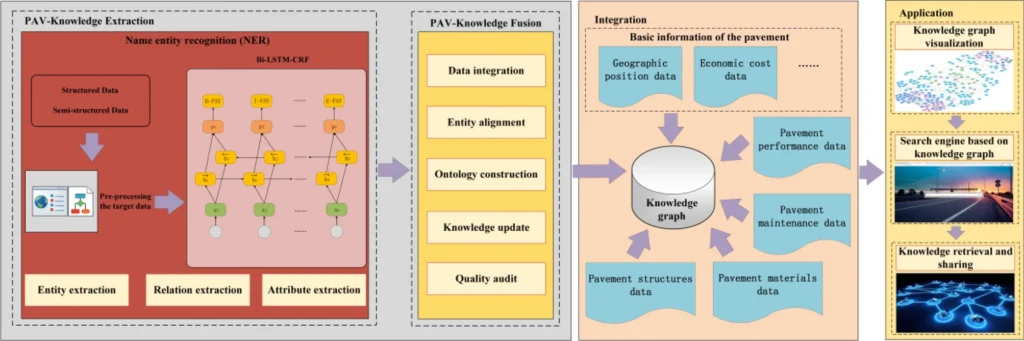

Fidelity risk in AI document processing is the failure of automated systems to maintain accurate correspondence between source documents and extracted information. This risk manifests across multiple dimensions, from basic data extraction errors to complex semantic drift that undermines information integrity.

One core manifestation of fidelity risk is Named Entity Recognition failure, where AI systems struggle with ambiguous references, domain-specific terminology, and contextual disambiguation. These failures compound when processing diverse document formats, creating cascading effects throughout downstream applications.

Document summarization amplifies fidelity concerns through compression artifacts and selective omission of critical details. AI models frequently introduce subtle distortions during abstraction, where paraphrasing alters meaning or eliminates nuanced context essential for accurate interpretation.

Knowledge graph construction represents perhaps the most complex fidelity challenge, requiring accurate entity extraction, relationship mapping, and semantic consistency across interconnected data points. Errors propagate through graph structures, creating systemic data misrepresentation that affects analytical outcomes.

The business implications of AI fidelity failures extend beyond technical accuracy to encompass regulatory compliance, operational reliability, and decision-making integrity. Organizations must establish comprehensive validation frameworks that combine automated quality metrics with human oversight, particularly for high-stakes applications where fidelity errors carry significant consequences.

AI Hallucinations and Information Integrity

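The post-processing validation discussed in this section can begin with a simple grounding check: every number in an extracted record should also appear somewhere in the source document. The sketch below assumes extractions arrive as a flat field-to-string dictionary. `find_unsupported_numbers` is a hypothetical helper. Exact string matching will miss legitimately reformatted values (such as "1.25M"), so it flags candidates for review rather than proving a hallucination.

```python
import re

# Digits with optional thousands separators and an optional decimal part.
NUMBER = re.compile(r"\d[\d,]*(?:\.\d+)?")

def normalize(num: str) -> str:
    """Strip thousands separators so "1,250,000" and "1250000" compare equal."""
    return num.replace(",", "")

def find_unsupported_numbers(source_text: str, extracted: dict[str, str]) -> dict[str, str]:
    """Return extracted fields containing a number that never appears in the source."""
    source_numbers = {normalize(n) for n in NUMBER.findall(source_text)}
    flagged = {}
    for field, value in extracted.items():
        numbers = [normalize(n) for n in NUMBER.findall(value)]
        if any(n not in source_numbers for n in numbers):
            flagged[field] = value
    return flagged

source = "Revenue for fiscal 2023 was $1,250,000, up from $980,000."
extracted = {"year": "2023", "revenue": "1250000", "net_income": "310000"}
print(find_unsupported_numbers(source, extracted))  # {'net_income': '310000'}
```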
When AI hallucinations occur in document processing, they represent a critical threat to information integrity: AI systems generate plausible but factually incorrect outputs that were never present in the source documents. Unlike traditional processing errors, hallucinations create fabricated entities, relationships, or numerical values that appear authentic but fundamentally compromise the trustworthiness of extracted data. This phenomenon becomes particularly dangerous in financial document processing, where research indicates that 68% of extraction errors stem from hallucinated numerical values, while 32% involve incorrect entity relationships.

The implications extend beyond isolated inaccuracies to systematic data misrepresentation that can cascade through business operations. When AI systems fill gaps in ambiguous documents with invented information, or when pattern completion mechanisms override actual content, the resulting outputs maintain apparent coherence while being fundamentally unreliable. These integrity violations become especially problematic in knowledge graph construction, where hallucinated entities can create false connections that persist and propagate errors throughout interconnected data structures.

Mitigation requires grounding techniques such as retrieval-augmented generation, which grounds AI outputs in explicitly documented information, combined with post-processing validation that cross-checks extracted data against source documents. Provenance tracking and cryptographic protections further help ensure that any alterations to processed information remain detectable, maintaining an auditable chain of data transformation that preserves accountability in AI-powered extraction workflows.

Semantic Drift and Knowledge Graph Accuracy

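The cosine-similarity thresholds mentioned in this section can be sketched as follows. To stay self-contained, the example uses bag-of-words vectors as a stand-in for the sentence embeddings a production system would more likely use. The 0.8 threshold is an arbitrary assumption, and all names are hypothetical. Each stage is compared against the original text rather than the previous stage, so gradual drift that compounds across stages still trips the check.

```python
import math
from collections import Counter

def bow_vector(text: str) -> Counter:
    """Bag-of-words stand-in for an embedding: word -> count."""
    return Counter(text.lower().split())

def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def check_drift(stages: list[str], threshold: float = 0.8) -> list[tuple[int, float]]:
    """Compare every stage with the original text and flag those below threshold."""
    original = bow_vector(stages[0])
    flagged = []
    for i, text in enumerate(stages[1:], start=1):
        sim = cosine_similarity(original, bow_vector(text))
        if sim < threshold:
            flagged.append((i, round(sim, 3)))
    return flagged

stages = [
    "acme corp acquired beta ltd in 2021",   # source sentence
    "acme corp acquired beta ltd in 2021",   # extraction stage: unchanged
    "beta ltd sued acme",                    # summary stage: relationship changed
]
print(check_drift(stages))  # [(2, 0.567)]
```

Bag-of-words vectors ignore word order, so "acme acquired beta" and "beta acquired acme" would score identically. This is one reason to pair similarity thresholds with the relationship validation the section describes.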
Beyond the immediate issue of hallucinations lies a more subtle but equally critical challenge: semantic drift within document processing workflows. Unlike outright fabrications, semantic drift represents the gradual erosion of meaning as information traverses multiple processing stages, from initial extraction through knowledge graph integration.

Semantic drift manifests when AI systems progressively alter conceptual relationships during data transformation, compression, or summarization processes. In named entity recognition tasks, this drift occurs when contextual clues become disconnected from their original semantic anchors, causing entities to lose their precise meaning or acquire unintended associations. The phenomenon is particularly pronounced in multi-step processing pipelines where each transformation introduces subtle distortions that compound over time.

Knowledge graphs serve as both a solution and a potential amplifier of drift. While they provide structured semantic relationships that can detect and prevent meaning degradation through contextual validation, they also create new vectors for semantic instability when entity relationships are incorrectly updated or when conflicting information sources introduce inconsistencies. Effective drift management requires implementing information integrity monitoring through cosine similarity thresholds and relationship validation protocols that maintain semantic coherence across processing stages.

The challenge intensifies when dealing with evolving document corpora where new terminology and relationships continuously emerge. Organizations must balance system adaptability with semantic stability, ensuring that data fidelity remains intact while allowing for legitimate conceptual evolution in their knowledge bases.

Measuring and Monitoring Data Extraction Accuracy

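The precision, recall, F1, and word error rate definitions in this section translate directly into code. The sketch below scores one document's extracted fields against a hand-labeled reference using exact string matches. The invoice fields and values are hypothetical. WER is computed as word-level edit distance divided by reference length.

```python
def field_metrics(predicted: dict[str, str], gold: dict[str, str]) -> tuple[float, float, float]:
    """Field-level precision, recall, and F1 for a single document."""
    correct = sum(1 for field, value in predicted.items() if gold.get(field) == value)
    precision = correct / len(predicted) if predicted else 0.0  # correct / extracted
    recall = correct / len(gold) if gold else 0.0               # correct / relevant
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / reference length, via edit distance."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution or match
        prev = curr
    return prev[-1] / len(ref) if ref else 0.0  # empty reference: treated as 0.0 here

gold = {"invoice_no": "INV-001", "date": "2024-01-05", "total": "99.50", "vendor": "Acme"}
predicted = {"invoice_no": "INV-001", "date": "2024-01-05", "total": "95.50"}
p, r, f = field_metrics(predicted, gold)
print(f"P={p:.3f} R={r:.3f} F1={f:.3f}")  # P=0.667 R=0.500 F1=0.571
print(word_error_rate("the total is 99.50", "the total was 99.50"))  # 0.25
```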
Building on the semantic challenges addressed in the previous chapter, establishing robust measurement and monitoring systems becomes critical for maintaining data extraction accuracy in AI document processing workflows. Effective accuracy monitoring requires comprehensive metrics that capture both the quality and completeness of extracted information.

Precision, recall, and F1 score form the cornerstone of extraction accuracy measurement, particularly for structured data extraction. Precision is the proportion of correctly extracted fields among all extracted fields, while recall measures completeness by comparing correctly identified fields to all relevant fields present in the source documents. The F1 score, the harmonic mean of precision and recall, combines both into a single balanced metric.

Beyond these traditional metrics, monitoring systems must incorporate field-level accuracy tracking and word error rate (WER) measurements to capture granular performance variations across document types and extraction tasks. Real-time confidence scoring enables dynamic quality assessment, allowing systems to flag potentially problematic extractions for human review before they propagate through downstream processes.

Continuous monitoring frameworks should establish baseline accuracy thresholds tailored to specific use cases and document categories. Iterative evaluation protocols using varied prompts and document samples help identify accuracy drift patterns, enabling proactive adjustments to extraction models before fidelity degradation compromises operational integrity. This systematic approach to accuracy measurement creates the foundation for the risk mitigation strategies explored in the following chapter.

Risk Mitigation Strategies and Best Practices

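A validation checkpoint of the kind this section describes might combine completeness, format, and confidence checks, sending anything that fails to a human reviewer. The field names, regex rules, and 0.85 threshold below are hypothetical. Real thresholds would be tuned per document type.

```python
import re
from dataclasses import dataclass

@dataclass
class FieldExtraction:
    name: str
    value: str
    confidence: float  # model-reported score in [0, 1]

# Hypothetical per-field format rules for an invoice workflow.
FORMAT_RULES = {
    "date": re.compile(r"\d{4}-\d{2}-\d{2}"),
    "total": re.compile(r"\d+\.\d{2}"),
}

def validate(extractions: list[FieldExtraction], required: set[str], threshold: float = 0.85):
    """Run completeness, format, and confidence checks; return (accepted, review)."""
    accepted, review = {}, []
    found = {e.name for e in extractions}
    for missing in sorted(required - found):        # completeness check
        review.append((missing, "missing required field"))
    for e in extractions:
        rule = FORMAT_RULES.get(e.name)
        if rule and not rule.fullmatch(e.value):    # format consistency check
            review.append((e.name, "format check failed"))
        elif e.confidence < threshold:              # confidence check
            review.append((e.name, f"low confidence {e.confidence:.2f}"))
        else:
            accepted[e.name] = e.value
    return accepted, review

batch = [
    FieldExtraction("date", "2024-01-05", 0.97),
    FieldExtraction("total", "99.5", 0.93),
    FieldExtraction("vendor", "Acme", 0.61),
]
accepted, review = validate(batch, required={"date", "total", "vendor", "invoice_no"})
print(accepted)  # {'date': '2024-01-05'}
for item in review:
    print(item)  # each item goes to a human reviewer
```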
Building on established monitoring frameworks, implementing comprehensive risk mitigation strategies requires multi-layered validation protocols and proactive quality controls. Effective strategies begin with robust input preprocessing, where documents undergo standardization and format validation before entering AI pipelines, significantly reducing downstream AI hallucinations and extraction errors.

Critical mitigation approaches include retrieval-augmented generation (RAG) frameworks that ground model outputs in verified source documents, reducing semantic drift during processing. Confidence scoring mechanisms flag low-certainty extractions for human review, while automated cross-validation compares outputs against known ground truth datasets. For data extraction workflows, validation checkpoints at each processing stage protect data quality through boundary checks, completeness verification, and format consistency testing.

Information integrity protection requires combining extractive document summarization with named entity recognition pipelines that feed validated entities into knowledge graphs for relationship verification. Human-in-the-loop oversight provides final validation for high-stakes documents, while continuous monitoring dashboards track extraction accuracy trends, enabling proactive intervention when fidelity degradation occurs. This comprehensive approach maintains document processing reliability while preserving semantic accuracy across diverse document types and formats.

Conclusions

Managing fidelity risk in AI-powered document processing requires a multi-faceted approach combining technical solutions with robust validation processes. As AI systems continue to evolve, organizations must remain vigilant in monitoring and maintaining data accuracy while implementing appropriate safeguards to ensure reliable information extraction and processing.

AI is Transforming Banking and Finance: Use Cases and Trends

Estimated reading time: 6 minutes

Key Takeaways

- AI is rapidly being adopted in banking to enhance efficiency, security, and customer experience.

- Cost reduction is a major driver, with potential savings of up to 60% in risk and compliance.

- Personalization is a key goal, moving banks toward customer-centric models.

- AI-powered fraud detection identifies suspicious activities in real-time.

- Chatbots offer 24/7 customer support and improve operational efficiency.

- AI enhances credit scoring by analyzing alternative data for more inclusive lending.

AI is no longer a futuristic concept in banking and finance; it’s a present-day reality reshaping the industry. This blog post explores the current trends, key use cases, and future implications of artificial intelligence in the financial sector.

The Rise of AI in Financial Services

Banks and financial institutions are aggressively adopting AI to stay competitive and meet rising client expectations – source: [https://www.ey.com/en_gr/insights/financial-services/how-artificial-intelligence-is-reshaping-the-financial-services-industry](https://www.ey.com/en_gr/insights/financial-services/how-artificial-intelligence-is-reshaping-the-financial-services-industry) – source: [https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025](https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025) – source: [https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf](https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf).

Cost reduction is a vital driver: AI automation could cut operational expenses, such as risk and compliance checks, by up to 60% in coming years – source: [https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025](https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025) – source: [https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf](https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf).

Stricter regulatory compliance requirements are driving demand for automated checks with higher accuracy and fewer false positives.

The demand for personalized banking experiences is pushing banks to shift from product-centric to customer-centric models using AI—delivering tailored advice, custom product bundles, and relevant services to each client – source: [https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025](https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025) – source: [https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf](https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf).

Key AI Use Cases in Banking

AI-Powered Fraud Detection & Risk Management

AI-driven fraud detection enables banks to identify suspicious activities and fraudulent transactions in real time by analyzing vast volumes of payment and behavioral data – source: [https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf](https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf).

Machine learning algorithms spot unusual patterns, learn from new fraud schemes, and improve over time, enhancing both fraud protection and the customer experience by reducing false positives – source: [https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf](https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf).
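Production fraud models are typically learned from many signals. The core idea of spotting unusual patterns can still be illustrated with a much simpler statistical baseline: flag a transaction whose amount lies several standard deviations from the customer's own history. This z-score test is a swapped-in illustration, not the machine learning approach the sources describe. The 3.0 threshold and the helper name `is_unusual` are assumptions.

```python
import statistics

def is_unusual(history: list[float], amount: float, z_threshold: float = 3.0) -> bool:
    """Flag an amount far outside a customer's past spending (a z-score test)."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return amount != mean
    return abs(amount - mean) / stdev > z_threshold

past = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0]
print(is_unusual(past, 43.0))   # False
print(is_unusual(past, 950.0))  # True
```

A learned model replaces the single amount feature with many behavioral features. It updates as new fraud schemes appear, which is what lets it reduce false positives over time.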

AI is also vital for risk management, enabling more precise risk scoring and better underwriting decisions across the financial sector – source: [https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf](https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financial_Services_2025.pdf).

Personalized Customer Service with Chatbots

AI-powered chatbots and virtual assistants offer 24/7 support, handling everything from balance inquiries to complex product advice – source: [https://www.ey.com/en_gr/insights/financial-services/how-artificial-intelligence-is-reshaping-the-financial-services-industry](https://www.ey.com/en_gr/insights/financial-services/how-artificial-intelligence-is-reshaping-the-financial-services-industry) – source: [https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025](https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025) – source: [https://www.posh.ai/blog/future-banking-trends-to-watch-in-2025](https://www.posh.ai/blog/future-banking-trends-to-watch-in-2025).

Examples like Bank of America’s Erica and Capital One’s Eno set the standard for always-available, context-aware service.

These systems boost operational efficiency and satisfaction by providing instant, reliable answers, freeing human agents to handle higher-value interactions – source: [https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025](https://www.accenture.com/us-en/insights/banking/top-10-trends-banking-2025) – source: [https://www

AI Evaluation: Best Practices for Testing and Assessing Generative AI Tools

Estimated reading time: 10 minutes

Key Takeaways

- Understanding the importance of AI Evaluation in ensuring effective and safe Gen AI tools.

- Exploring key evaluation metrics and performance measurement strategies.

- Identifying challenges in Gen AI evaluation and proposing solutions.

- Learning from real-world applications and case studies.

- Anticipating future trends in Gen AI evaluation.

Introduction

Generative AI tools, often referred to as Gen AI, are dramatically reshaping various industries, from content creation to healthcare. These systems are capable of generating new content, such as text, images, and code. However, their effectiveness and reliability are deeply contingent on thorough AI Evaluation. This blog post delves into the best practices for testing and evaluating Gen AI tools to ensure they are effective, relevant, and safe, using appropriate Gen AI Evaluation metrics and performance measurements.

Understanding Gen AI Evaluation

What Is Generative AI?

Gen AI systems are capable of creating new content rather than merely analyzing old data. These systems find applications in diverse areas, such as:

- Text Generation: Improving chatbots and aiding content creators.

- Image Generation: Assisting in design and art creation.

- Code Generation: Helping software development processes.

Evaluation is essential for ensuring that Gen AI tools produce high-quality and relevant outputs. Proper evaluation reveals both the strengths and the potential biases of these models.

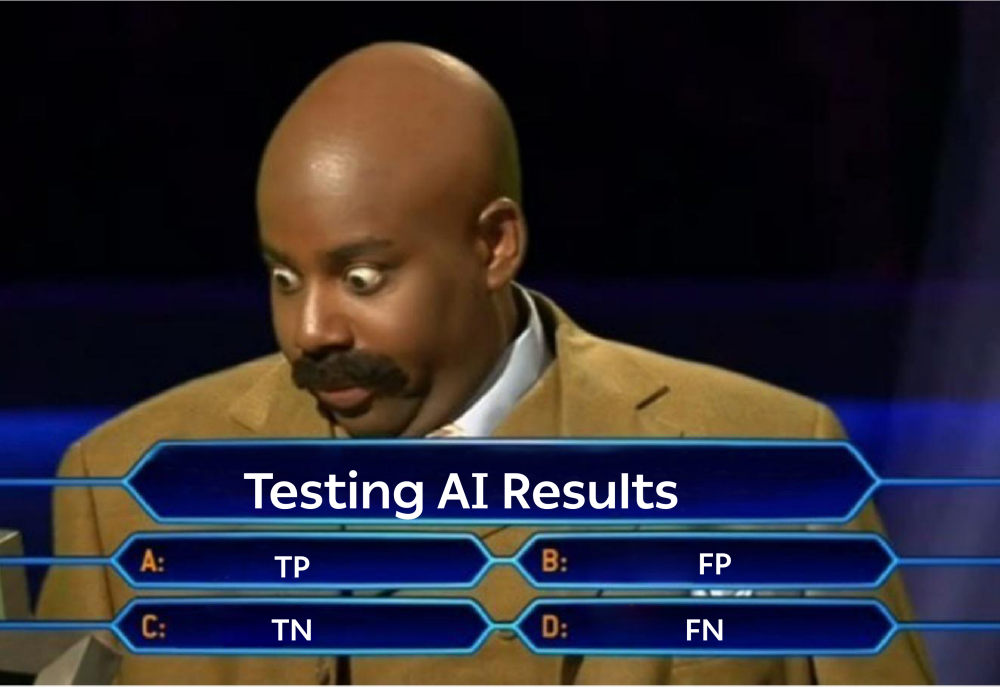

Setting the Ground for Evaluation Metrics

The Role of Evaluation Metrics

Evaluation metrics serve as objective standards to assess AI model effectiveness. They are crucial for understanding how a Gen AI tool performs over multiple dimensions.

Types of Evaluation Metrics

- Quality Metrics:

  - Coherence: Evaluates logical consistency of generated content.

  - Relevance: Assesses how closely the output aligns with the given input or context.

- Safety Metrics:

  - Toxicity: Detects any harmful or offensive content.

  - Bias Detection: Identifies prejudiced tendencies in model outputs.

- Performance Metrics:

  - Response Time: Measures how swiftly a model can generate outputs.

  - Resource Utilization: Evaluates the computational resources utilized by the model, impacting scalability.

Key Gen AI Evaluation Metrics

Generation Quality Metrics

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): This metric measures the quality of machine-generated summaries or translations by comparing them to human references [14].

- Perplexity: Used for language models, this metric measures how well a probability model predicts a sample. Lower values indicate better performance. Source: Addaxis AI.

- BLEU (Bilingual Evaluation Understudy): Commonly used for machine translation, BLEU compares machine-generated text to human-written references. Source: Addaxis AI.
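Two of these metrics reduce to a few lines each. The sketch below shows perplexity as the exponential of the average negative log-probability a model assigns to the actual tokens, and a simplified ROUGE-1 recall. The token probabilities are made-up inputs. Full ROUGE implementations add stemming, precision and F-measure variants, and longer n-grams. BLEU is omitted because it also needs a brevity penalty and a geometric mean over several n-gram orders.

```python
import math
from collections import Counter

def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability assigned to each actual token."""
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))

def rouge1_recall(candidate: str, reference: str) -> float:
    """Share of reference unigrams that also appear in the candidate (counts clipped)."""
    cand, ref = Counter(candidate.lower().split()), Counter(reference.lower().split())
    overlap = sum(min(count, cand[word]) for word, count in ref.items())
    return overlap / sum(ref.values())

print(perplexity([0.25, 0.25, 0.25, 0.25]))  # about 4.0: as uncertain as a 4-way guess
print(rouge1_recall("the cat was on the mat", "the cat sat on the mat"))  # 5/6, about 0.833
```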

Safety and Responsibility Metrics

- Toxicity: Evaluates the presence of harmful content to prevent the spread of inappropriate information.

- Bias Detection: Assesses outputs for unfair biases, ensuring ethical standards.

Performance Metrics

- Response Time: Evaluates the model’s speed, crucial for user satisfaction.

- Resource Utilization: Assesses computational needs, impacting cost and scalability.

Performance Measurement Strategies

Best Practices for Measuring Gen AI Performance

1. Automated Evaluation

Tools such as Azure AI Foundry facilitate automated and scalable assessments of Gen AI models. This approach ensures efficiency and consistency. Source: Google Cloud.

2. Human-in-the-Loop Evaluation

Combining automated metrics with human insights captures subtleties such as creativity or contextual relevance. Source: DataStax.

3. Benchmarking Against Industry Standards

Comparing Gen AI tools against industry benchmarks helps identify areas for enhancement and competitive differentiation. Source: DataForce.

4. Continuous Monitoring

Consistent monitoring helps detect and manage performance degradation over time. [13]

Challenges in Gen AI Evaluation

Common Challenges

- Subjectivity in Quality Assessment: Elements like creativity or relevance are hard to measure objectively.

- Data Limitations: Securing diverse datasets for evaluation is challenging.

- Evolving Standards: Rapid advancements in Gen AI technology require continual updates in evaluation criteria.

- Potential Biases: AI models may inherit biases from their training data.

Solutions

- Blend qualitative and quantitative assessments.

- Regularly refresh and diversify datasets.

- Keep up with industry updates and best practices.

Tools and Techniques for Effective Evaluation

Common Tools for AI Evaluation

Azure AI Foundry

Offers both automated metrics and human-in-the-loop assessments, covering a comprehensive evaluation spectrum. Source: Google Cloud.

FMEval

Provides a set of standardized metrics for assessing quality and responsibility in language models. [17]

OpenAI's Evaluation Framework

This framework provides diverse metrics to assess the quality, coherence, and relevance of AI outputs. Source: Addaxis AI.

Case Studies and Real-World Applications

Walmart: A Success in AI-Powered Inventory Management

Walmart implemented AI tools for inventory management and, according to the source, improved efficiency by 97%, a result attributed to rigorous evaluation. Customizing evaluation metrics to align with operational goals was key. Source: Psico-Smart.

Mount Sinai Health System: Healthcare Innovation with Gen AI

Mount Sinai reduced hospital readmission rates by 20% with rigorously evaluated AI tools, showcasing the necessity of robust assessment in healthcare. Key lessons included a focus on data quality and ethics. Source: Psico-Smart.

Lessons Learned

Tailored evaluation leads to better outcomes; continuous assessment results in notable performance improvements.

Future of Gen AI Evaluation

Emerging Trends

AI-Powered Evaluation

Using advanced AI models for Gen AI evaluation enhances scalability and provides context-aware assessments. Source: DataStax.

Ethical AI Evaluation

There is growing emphasis on the ethical impact of Gen AI tools, aligning outputs with societal and regulatory standards. [16]

Adaptive Evaluation Frameworks

Frameworks that adapt to new AI capabilities future-proof evaluation strategies. [11]

Evaluation methods must evolve with AI advancements. Continuous education and collaboration with industry bodies are essential.

Conclusion

Effective AI Evaluation is fundamental to maximizing the benefits of Gen AI tools. It ensures reliability, mitigates risks, and enhances user trust. Continuous learning and adaptation to evolving industry standards are crucial for sustained success.

Investing in comprehensive evaluation not only yields competitive advantages but also improves performance outcomes.

Call to Action

We invite you to share your experiences, challenges, or questions about Gen AI evaluation in the comments. For extended learning on Gen AI Evaluation metrics and performance measurement, explore resources from industry leaders like NIST. [16]

Through diligent evaluation practices, your organization can harness the full potential of Gen AI tools.

Retrieval Augmented Generation: Transforming AI with Real-Time Knowledge Integration

Estimated reading time: 8 minutes

Key Takeaways

- Retrieval Augmented Generation (RAG) enhances language models by incorporating external knowledge retrieval.

- RAG addresses critical limitations of traditional AI models like outdated information and lack of domain specificity.

- By combining retrieval and generative models, RAG provides accurate, current, and context-specific responses.

- RAG has diverse applications across industries, transforming sectors like healthcare, legal, and finance.

- Organizations adopting RAG can expect improved accuracy, transparency, and cost-effective AI solutions.

Table of Contents

- Understanding Retrieval Augmented Generation

- Why RAG Matters: Addressing Critical AI Limitations

- The Inner Workings of RAG

- Essential Components and Their Roles

- The Impressive Benefits of RAG Implementation

- Real-World Applications

- Navigating Challenges and Considerations

- Looking Ahead: The Future of RAG

- Frequently Asked Questions

Understanding Retrieval Augmented Generation

Retrieval Augmented Generation represents a significant leap forward in AI technology, enhancing the capabilities of large language models (LLMs) by incorporating external knowledge retrieval. According to AWS, this technique effectively bridges the gap between traditional language models and real-world information needs. Rather than relying solely on pre-trained knowledge, RAG enables AI systems to access and utilize current, relevant data from external sources.

Why RAG Matters: Addressing Critical AI Limitations

Traditional language models, despite their impressive capabilities, face several significant limitations that RAG aims to overcome. The Weka.io learning guide highlights four primary challenges that RAG addresses:

- The "Frozen in Time" Problem

Traditional LLMs operate with static knowledge from their training data, making them unable to access current information. RAG solves this by allowing real-time access to updated information sources.

- Limited Domain Knowledge

Standard language models lack specialized knowledge for specific industries or companies. RAG enables the integration of domain-specific information, making AI systems more valuable for specialized applications. For example, integrating insights from AI in Banking and Finance: Revolutionizing the Financial Sector can enhance financial AI applications.

- The Black Box Issue

Understanding how AI systems reach their conclusions has been a persistent challenge. RAG provides transparency by clearly identifying information sources.

- AI Hallucinations

According to SuperAnnotate, one of the most significant challenges with traditional LLMs is their tendency to generate plausible but incorrect information. RAG significantly reduces this risk by grounding responses in verified external sources.

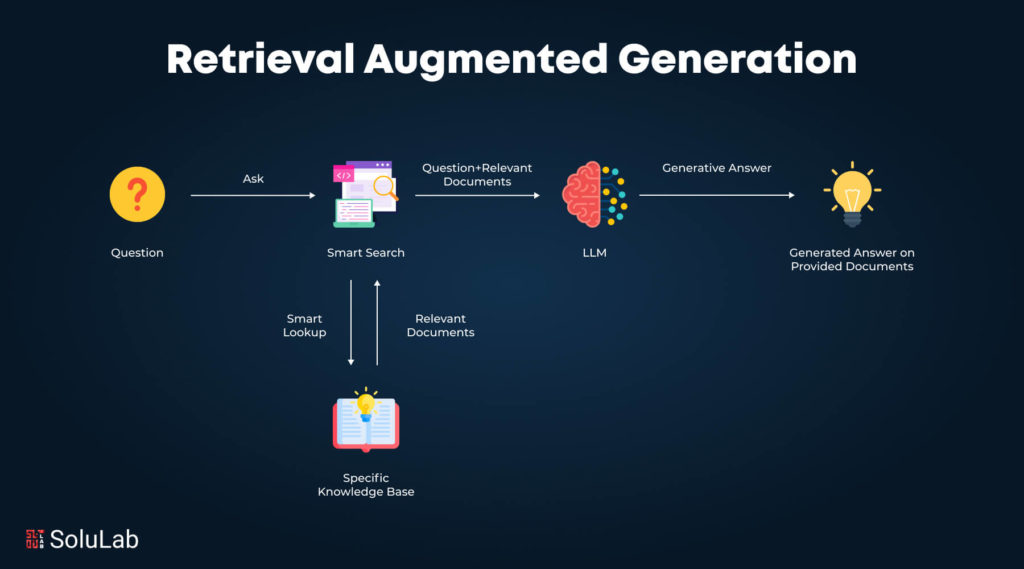

The Inner Workings of RAG

The RAG process operates through a sophisticated four-step approach:

- Indexing Phase

The system begins by processing and indexing unstructured data from various sources, creating a searchable knowledge base.

- Retrieval Process

When a query is received, the system searches for and retrieves relevant information from the indexed data.

- Augmentation Stage

The system combines the retrieved information with the original query, creating a context-rich input.

- Generation Step

Finally, the language model generates a response based on both the query and the retrieved information.
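The four steps can be sketched end to end in a few functions. This toy version uses keyword-overlap scoring in place of the embedding search real RAG systems typically use. It stops at building the augmented prompt and leaves the generation call to whatever language model is available. All function names and documents are illustrative.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs: list[str]) -> list[Counter]:
    """Step 1, indexing: store a term-count vector for each document."""
    return [Counter(tokenize(doc)) for doc in docs]

def retrieve(query: str, docs: list[str], index: list[Counter], k: int = 2) -> list[str]:
    """Step 2, retrieval: rank documents by how often they contain query terms."""
    terms = set(tokenize(query))
    ranked = sorted(range(len(docs)),
                    key=lambda i: sum(index[i][t] for t in terms),
                    reverse=True)
    return [docs[i] for i in ranked[:k]]

def augment(query: str, passages: list[str]) -> str:
    """Step 3, augmentation: combine numbered passages with the original query."""
    context = "\n".join(f"[{n}] {p}" for n, p in enumerate(passages, start=1))
    return ("Answer using only the numbered sources below, citing them.\n"
            f"{context}\n\nQuestion: {query}")

docs = [
    "The refund policy allows returns within 30 days of purchase.",
    "Our offices are closed on public holidays.",
    "Refunds are issued to the original payment method.",
]
index = build_index(docs)
query = "What is the refund policy for returns?"
prompt = augment(query, retrieve(query, docs, index))
print(prompt)
# Step 4, generation: send `prompt` to a language model (not shown here).
```

Numbering the passages in the prompt is what makes the source citations described later possible: the model can refer back to "[1]" or "[2]".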

Essential Components and Their Roles

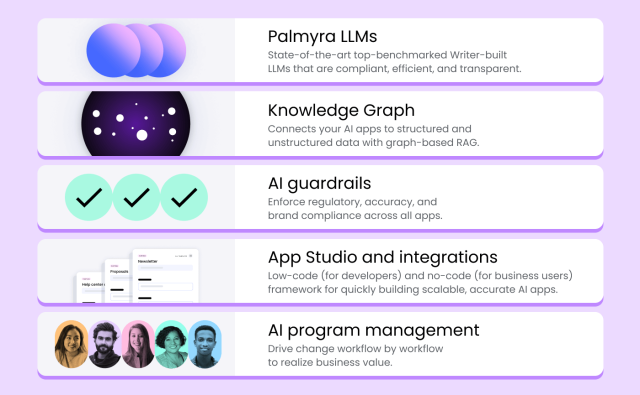

According to AWS, RAG systems rely on three crucial components:

- The Retriever

This component acts as the system's librarian, efficiently searching and retrieving relevant documents and facts from various knowledge sources.

- The Language Model

A sophisticated pre-trained model that processes the combined information to generate coherent and contextually appropriate responses.

- The Vector Database

This advanced storage system maintains documents as embeddings in high-dimensional space, enabling quick and efficient information retrieval.
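The vector database's core operation, finding the stored embeddings closest to a query embedding, can be sketched with a brute-force cosine search. The three-dimensional vectors below are hand-made stand-ins for embeddings from a real model. `InMemoryVectorStore` is a hypothetical class, not any product's API. Production vector databases typically use approximate nearest-neighbor indexes to stay fast at scale.

```python
import math

class InMemoryVectorStore:
    """Toy stand-in for a vector database: stores (embedding, text) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, embedding: list[float], text: str) -> None:
        self.items.append((embedding, text))

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query: list[float], k: int = 1) -> list[tuple[float, str]]:
        """Exact nearest-neighbour search by cosine similarity (brute force)."""
        scored = [(self._cosine(query, emb), text) for emb, text in self.items]
        return sorted(scored, reverse=True)[:k]

store = InMemoryVectorStore()
store.add([0.9, 0.1, 0.0], "Refund policy")
store.add([0.0, 0.8, 0.6], "Office hours")
store.add([0.7, 0.0, 0.7], "Payment methods")
for score, text in store.search([1.0, 0.0, 0.0], k=2):
    print(f"{score:.3f} {text}")
```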

The Impressive Benefits of RAG Implementation

The advantages of implementing RAG are substantial and measurable:

- 43% Improvement in Accuracy

Some studies have reported that RAG-based responses achieve significantly higher accuracy than traditional fine-tuning approaches.

- Real-time Information Access

RAG systems can access and utilize current information without requiring costly and time-consuming model retraining.

- Specialized Knowledge Integration

Organizations can incorporate their proprietary information and specialized knowledge bases into AI responses. For instance, integrating solutions from AI Automation in Finance: Revolutionizing Financial Services and Unlocking Innovation can optimize financial decision-making processes.

- Enhanced Transparency

RAG systems can provide clear source citations, making responses more trustworthy and verifiable.

- Cost-Effective Updates

Maintaining and updating RAG systems requires fewer resources compared to retraining entire language models.

Real-World Applications

According to Signity Solutions, RAG is transforming various industries:

- Healthcare

Medical professionals are using RAG systems to access current research, improve diagnoses, and provide better patient care by integrating up-to-date medical information.

- Legal Services

Law firms are implementing RAG to assist lawyers with case research, providing relevant legal precedents and local law citations.

- Customer Support

Companies are enhancing their chatbots with RAG technology to provide more accurate, company-specific information to customers.

- Research and Development

Scientists and researchers are accelerating their work by using RAG systems for comprehensive literature reviews and hypothesis generation.

- Content Creation

As reported by Glean, journalists and content creators are utilizing RAG to efficiently access and verify facts and figures, improving the quality and accuracy of their work.

- Financial Services

Leveraging insights from AI in Banking and Finance: Revolutionizing the Financial Sector and AI Automation in Finance: Revolutionizing Financial Services and Unlocking Innovation, financial institutions are enhancing their AI-driven fraud detection and risk management capabilities.

Navigating Challenges and Considerations

While RAG represents a significant advancement, organizations must consider several important factors:

- Information Quality Control: The system's effectiveness heavily depends on the quality and relevance of the retrieved information.

- Security Protocols: When handling sensitive or proprietary information, robust security measures must be implemented.

- Technical Integration: Organizations need to carefully plan the integration of RAG systems with their existing AI infrastructure. Insights from AI Automation in Finance can guide the seamless incorporation of automation tools.
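A common way to enforce information quality control is to filter retrieval results before they reach the model. This sketch assumes results arrive as (similarity score, text) pairs. It drops anything below a minimum score, removes duplicate chunks, and caps how many passages are passed on. The threshold and cap are illustrative defaults that would need tuning against a real corpus and embedding model.

```python
def filter_passages(
    results: list[tuple[float, str]],
    min_score: float = 0.3,
    max_passages: int = 3,
) -> list[tuple[float, str]]:
    """Keep only confident, non-duplicate retrieval results.

    results: (similarity_score, text) pairs, in any order.
    min_score and max_passages are illustrative defaults, not recommendations.
    """
    kept: list[tuple[float, str]] = []
    seen: set[str] = set()
    for score, text in sorted(results, key=lambda r: r[0], reverse=True):
        if score < min_score:
            break  # sorted descending, so every remaining score is lower too
        key = " ".join(text.lower().split())  # normalize case and whitespace
        if key in seen:
            continue  # drop duplicates, e.g. the same chunk indexed twice
        seen.add(key)
        kept.append((score, text))
        if len(kept) == max_passages:
            break
    return kept
```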

Looking Ahead: The Future of RAG

As artificial intelligence continues to evolve, RAG stands as a testament to the industry's commitment to improving AI capabilities. Its ability to combine the power of generative AI with dynamic, context-specific information retrieval marks a significant step forward in making AI systems more reliable, accurate, and practical for real-world applications.

The technology's potential to enhance decision-making, improve information accuracy, and provide transparent AI solutions makes it an invaluable tool for organizations across various sectors. As we move forward, RAG's role in shaping the future of AI applications appears increasingly significant, promising more intelligent, trustworthy, and capable AI systems for tomorrow's challenges.

Frequently Asked Questions

What is Retrieval Augmented Generation (RAG)?

RAG is an AI technique that enhances language models by incorporating external information retrieval, allowing them to access and utilize current, relevant data from various sources.

How does RAG improve AI accuracy?

By grounding responses in verified external sources, RAG reduces the risk of AI hallucinations and improves the accuracy and reliability of generated content.

What are the key components of a RAG system?

The key components include the Retriever, the Language Model, and the Vector Database, each playing a crucial role in information retrieval and response generation.

Which industries can benefit from RAG?

Industries such as healthcare, legal services, finance, customer support, research and development, and content creation can significantly benefit from implementing RAG systems.

What challenges should be considered when implementing RAG?

Organizations should consider information quality control, security protocols for sensitive data, and the technical integration with existing AI infrastructure.

AI-Powered PPT Generator

How I Built an AI That Turns Ideas into PowerPoint Slides

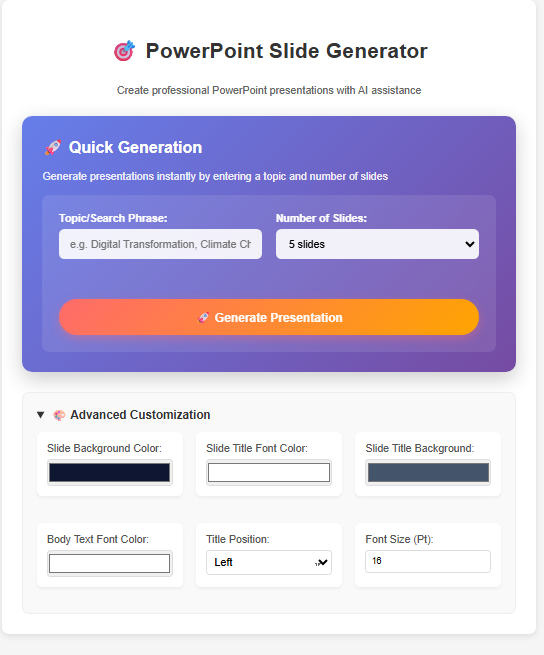

What if there were a product that could take your idea, do the research, find the relevant facts and figures, and then turn that data into a nicely crafted PowerPoint presentation?

Almost everyone now uses AI (LLMs) for research. But while research has been automated, presentation has not, and I personally find creating a PowerPoint presentation significantly time-consuming. So I applied my limited knowledge of AI-driven automation to see whether this chore could be automated (I was aiming for about 90%).

This question was the spark for Slider, a web application designed to do just that: turn a single search phrase into a fully-formed, downloadable PowerPoint presentation. This is the story of its development—a journey from a simple idea to a robust, resilient web service, fraught with subtle bugs, architectural pivots, and invaluable lessons in software engineering.

Chapter 1: The Spark of an Idea (The "Why")

The initial goal was ambitious but clear: create a tool that automates the entire presentation creation pipeline. A user should be able to provide a topic, say, "The Future of Renewable Energy," and in return, receive a ".pptx" file complete with a title slide, content slides with key points, and even data visualizations like charts and tables. The aim was to save hours of manual work and provide a solid, editable foundation that users could then refine.

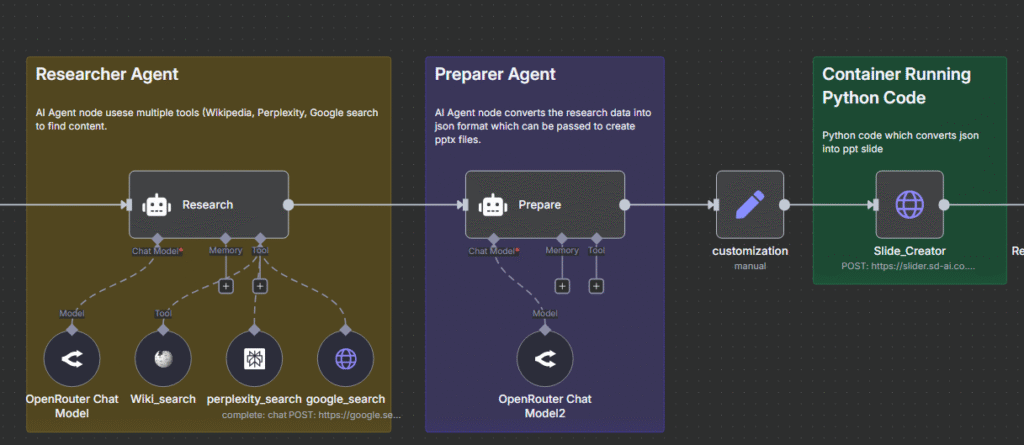

Chapter 2: The Initial Architecture (The "How")

To bring this idea to life, I settled on a modern, decoupled architecture:

- Frontend: A simple HTML form with a sprinkle of vanilla JavaScript.

- Backend: A Python server using FastAPI.

- The AI & Orchestration Layer: Leveraging n8n for workflow automation.

- The Presentation Generator: python-pptx for building slides.

The n8n Workflow

- FastAPI calls an n8n webhook with the search phrase.

- n8n generates structured JSON (titles, bullets, mock chart data).

- JSON is passed back to FastAPI, which builds the presentation.
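FastAPI builds the deck from whatever JSON n8n returns, so it helps to check that JSON's shape before touching python-pptx. The schema below is an assumption for illustration, not the project's actual format: a deck title plus slides, each with a title and a bullet list. The point is to fail with a clear message instead of crashing halfway through slide generation.

```python
import json
from dataclasses import dataclass, field


@dataclass
class SlideSpec:
    title: str
    bullets: list[str] = field(default_factory=list)


@dataclass
class DeckSpec:
    title: str
    slides: list[SlideSpec]


def parse_deck(raw: str) -> DeckSpec:
    """Parse the workflow's JSON reply, failing loudly on a malformed shape.

    Assumed (hypothetical) shape:
    {"title": str, "slides": [{"title": str, "bullets": [str, ...]}, ...]}
    """
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict) or not isinstance(data.get("title"), str):
        raise ValueError("reply must be an object with a string 'title'")
    slides_raw = data.get("slides")
    if not isinstance(slides_raw, list) or not slides_raw:
        raise ValueError("reply must contain a non-empty 'slides' list")
    slides = []
    for i, s in enumerate(slides_raw):
        if not isinstance(s, dict) or not isinstance(s.get("title"), str):
            raise ValueError(f"slide {i} needs a string 'title'")
        bullets = s.get("bullets", [])
        if not isinstance(bullets, list) or not all(isinstance(b, str) for b in bullets):
            raise ValueError(f"slide {i} 'bullets' must be a list of strings")
        slides.append(SlideSpec(title=s["title"], bullets=bullets))
    return DeckSpec(title=data["title"], slides=slides)
```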

Chapter 3: First Blood - The "Division by Zero" Bug

The first failure surfaced as this error:

Error creating presentation: float floor division by zero

Matplotlib choked when the AI returned empty data for charts. The fix was to validate chart data before rendering.
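A minimal version of that validation step might look like the sketch below. `validate_chart_data` is a hypothetical name, not the project's code. The rules are assumptions about what tends to break chart rendering:

- empty or mismatched series
- non-numeric or non-finite values
- an all-zero series, which leaves nothing to scale

```python
import math


def validate_chart_data(categories: list[str], values: list[float]) -> tuple[bool, str]:
    """Check chart data before handing it to Matplotlib.

    Returns (ok, reason). The rules are illustrative assumptions about
    inputs that break rendering, not an exhaustive list.
    """
    if not categories or not values:
        return False, "empty chart data"
    if len(categories) != len(values):
        return False, "categories and values differ in length"
    for v in values:
        # bool is a subclass of int, so reject it explicitly
        if isinstance(v, bool) or not isinstance(v, (int, float)):
            return False, f"non-numeric value: {v!r}"
        if not math.isfinite(v):
            return False, f"non-finite value: {v!r}"
    if all(v == 0 for v in values):
        return False, "all values are zero"
    return True, "ok"
```

When the check fails, the slide can fall back to text-only content instead of aborting the whole presentation.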

Chapter 4: The Silent Killer - The Timeout Issue

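httpx's default timeout (5 seconds of network inactivity) killed long-running AI workflows. The fix was to disable the timeout for the n8n call:

```python
# Inside the async request handler: timeout=None turns off httpx's
# default 5-second timeouts, so a slow n8n workflow is not cut off.
async with httpx.AsyncClient(timeout=None) as client:
    response = await client.post(N8N_WEBHOOK_URL, json=webhook_payload)
```

Chapter 5: Improving the User Experience (The "Feel")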

Fixing backend logic wasn’t enough; the real bottleneck was how it felt to users. An app that quietly sits there for minutes without feedback is indistinguishable from one that’s broken. I knew I had to design for perceived performance, not just raw speed. This led to the introduction of a dynamic loading system:

- A spinner to visually confirm that the request was in progress.

- Rotating status updates such as “Contacting AI assistant…”, “Generating slides…”, and “Almost done…”.

- Clear error messages if something failed, so users weren’t left guessing.

Chapter 6: The Devil in the Details – Fonts and Aesthetics

Once the system was stable, smaller issues came into focus, and they mattered more than I expected.

Font issues: My Docker container didn’t include the Segoe UI font the design relied on.

As a result, charts rendered with odd-looking fallback fonts, making them look unpolished.

The fix was to install fonts and rebuild the font cache inside the container.

Invisible text bug: Some slides came out with black text on dark backgrounds.

This wasn’t a crash—it was worse, because users thought the tool worked, but the slides were unreadable.

The workaround was a brute-force loop that:

- Iterated through every shape on every slide.

- Explicitly set the font color to white.

- Enforced consistent readability across the deck.
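Forcing white text works only if every background is dark. A more general alternative, which the project did not use, is to pick black or white per slide from the background's WCAG contrast ratio. The sketch below is pure Python. How you read a slide's background color depends on the template, so that step is left out.

```python
def _relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB color given as 'RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    if len(hex_color) != 6:
        raise ValueError(f"expected RRGGBB, got {hex_color!r}")
    channels = []
    for i in (0, 2, 4):
        c = int(hex_color[i:i + 2], 16) / 255
        # Undo the sRGB transfer curve to get linear light
        channels.append(c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4)
    r, g, b = channels
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted((_relative_luminance(fg), _relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def pick_text_color(background: str) -> str:
    """Return 'FFFFFF' or '000000', whichever contrasts more with the background."""
    white = contrast_ratio("FFFFFF", background)
    black = contrast_ratio("000000", background)
    return "FFFFFF" if white >= black else "000000"
```

You could then apply the returned hex string with python-pptx's `RGBColor.from_string` inside the same shape loop.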

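A small helper checks whether the generated bytes can actually be opened as a presentation:

```python
import io

from pptx import Presentation


def is_valid_pptx(file_content: bytes) -> bool:
    """Return True if python-pptx can open the bytes as a presentation."""
    if not file_content:
        # Empty or missing content can never be a valid file
        return False
    try:
        file_stream = io.BytesIO(file_content)
        Presentation(file_stream)  # raises if the bytes are not a readable .pptx
        return True
    except Exception:
        # Any parsing failure (bad ZIP, missing parts, etc.) means invalid
        return False
```

Conclusion: Lessons Learned and The Road Ahead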

- Defensive Programming: Validate all data.

- Know Your Tools: Defaults matter (timeouts, libraries).

- User Experience is Paramount: Feedback beats silence.

- Build for Failure: Handle errors gracefully.
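Defensive programming applies to the finished file too. Alongside a full python-pptx parse like `is_valid_pptx`, a cheaper standard-library pre-check is possible. A .pptx file is a ZIP package that conventionally contains `[Content_Types].xml` and `ppt/presentation.xml`. This sketch is an addition, not part of the project. It catches truncated or non-ZIP output but cannot prove the slides themselves are well-formed.

```python
import io
import zipfile

# Parts a .pptx package conventionally contains (Office Open XML layout).
REQUIRED_PARTS = {"[Content_Types].xml", "ppt/presentation.xml"}


def looks_like_pptx(file_content: bytes) -> bool:
    """Cheap structural check: is this a ZIP holding the core PresentationML parts?"""
    if not file_content:
        return False
    try:
        with zipfile.ZipFile(io.BytesIO(file_content)) as archive:
            names = set(archive.namelist())
            # testzip() returns the first corrupt member's name, or None if all CRCs pass
            return REQUIRED_PARTS <= names and archive.testzip() is None
    except zipfile.BadZipFile:
        return False
```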

You can check out the source code for this project on GitHub. What features would you like to see next? Let me know in the comments!

AI in Banking and Finance: Revolutionizing the Financial Sector



Key Takeaways

- AI is revolutionizing the banking and finance sector by enhancing efficiency, customer experience, and security.

- Integration of AI leads to improved operational efficiency through automation of routine tasks.

- AI enhances fraud detection and risk management, safeguarding assets and reducing financial crime.

- AI provides personalized banking services and improves customer satisfaction.

- Challenges such as data privacy, algorithmic bias, and regulatory compliance need to be addressed.

Table of contents

- AI in Banking and Finance: Revolutionizing the Financial Sector

- Key Takeaways

- Overview of AI in the Financial Sector

- How AI is Transforming Financial Services

- AI Use Cases in Banking

- Machine Learning in Banking

- AI-Driven Fraud Detection

- AI Risk Management in Finance

- Challenges and Ethical Considerations

- The Future of AI in Banking and Finance

- Conclusion

- Frequently Asked Questions

Artificial Intelligence (AI) in banking and finance is revolutionizing the financial sector, driving innovation, and transforming customer experiences. As AI continues to advance rapidly, its impact on various industries becomes increasingly significant. AI is the simulation of human intelligence processes by machines, particularly computer systems; these processes include learning, reasoning, and self-correction. This blog post explains how AI is transforming banking and finance and explores various use cases and future trends.

Overview of AI in the Financial Sector

Integration of AI in Banking and Finance

The integration of AI in banking and finance is on the rise as financial institutions adopt AI technologies to stay competitive. From enhanced customer interactions to operational efficiencies, AI offers numerous benefits.

Benefits of AI in the Financial Sector

- Improved Operational Efficiency: Automating routine tasks with AI reduces operational costs and streamlines processes.

- Enhanced Customer Service: AI provides personalized services and 24/7 support, significantly improving customer satisfaction.

- Strengthened Security Measures: AI enhances fraud detection and risk management, safeguarding assets.

With the shift towards digital banking, powered by AI, financial services are becoming more accessible and efficient across the globe (Appinventiv).

How AI is Transforming Financial Services

Enhanced Customer Experience

- AI-Powered Chatbots: AI-driven chatbots and virtual assistants, like Bank of America's Erica, serve millions, offering personalized support and advice.

- Faster Processes: AI accelerates loan approvals and account openings through automated systems (Appinventiv).

Improved Operational Efficiency

- Task Automation: AI automates tasks such as data entry and transaction processing.

- Data Insights: AI-driven analysis provides better business insights and facilitates compliance reporting.

Advanced Fraud Detection

- Real-Time Monitoring: AI monitors transactions to spot suspicious patterns.

- Adaptive Algorithms: Machine learning algorithms evolve to combat new fraud techniques.
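A deliberately simple statistical rule can illustrate real-time monitoring. It keeps a running mean and standard deviation of each account's transaction amounts, using Welford's online algorithm, and flags amounts that sit many standard deviations away. This is a stand-in for illustration, not how any bank's system works. Production fraud models are trained on far more signals than amount alone. The class names, threshold, and minimum-history values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online mean/variance for one account's transaction amounts."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; undefined (0.0 here) with fewer than 2 points
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


class AmountMonitor:
    """Flag transactions far outside an account's own history (z-score rule).

    threshold and min_history are illustrative values, not tuned settings.
    """

    def __init__(self, threshold: float = 4.0, min_history: int = 5) -> None:
        self.threshold = threshold
        self.min_history = min_history
        self.stats: dict[str, RunningStats] = {}

    def check(self, account: str, amount: float) -> bool:
        s = self.stats.setdefault(account, RunningStats())
        suspicious = False
        if s.n >= self.min_history and s.std > 0:
            suspicious = abs(amount - s.mean) / s.std > self.threshold
        # Every transaction updates the baseline, including flagged ones;
        # holding flagged amounts back until reviewed is a reasonable alternative.
        s.update(amount)
        return suspicious
```

The running statistics update with every transaction, so each account's baseline adapts over time. This is a very small-scale version of the adaptive behavior described above.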

Risk Management

- Accurate Credit Scoring: AI provides more precise credit scoring models.

- Market Risk Assessment: AI predicts market risks and forecasts changes effectively.

AI Use Cases in Banking

Automated Customer Support

Banks employ AI-powered chatbots to handle customer queries efficiently. Bank of America's Erica is a notable example. Benefits include round-the-clock availability and swift response times (Appinventiv).

Personalized Banking Experiences

AI analyzes customer data to offer personalized product recommendations and financial advice. JPMorgan Chase utilizes AI for personalized investment guidance (Rackspace Technology).

Predictive Analytics

AI forecasts customer behavior, market trends, and potential risks. For instance, Wells Fargo uses AI to predict customer loan defaults (The Alan Turing Institute).

Machine Learning in Banking

Defining Machine Learning

Machine learning, a subset of AI, focuses on developing systems that can learn from data and make decisions.

Applications of Machine Learning

- Credit Scoring: ML models assess creditworthiness, reducing default rates.

- Risk Assessment: Banks leverage ML for evaluating market risks.

- Market Predictions: ML algorithms forecast market trends for strategic investments.
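The sketch below makes "learning from data" concrete. It fits a logistic-regression credit model with plain gradient descent on a handful of made-up applicants. The two features (debt-to-income ratio and share of missed payments, both scaled from 0 to 1) and all data points are hypothetical. Real credit models use richer data, validation, and fairness checks.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow in math.exp)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: list[float], x: list[float]) -> float:
    """Probability of default; w[0] is the bias, w[1:] are feature weights."""
    return sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))


def train_logistic(
    rows: list[list[float]],
    labels: list[int],
    lr: float = 0.5,
    epochs: int = 2000,
) -> list[float]:
    """Fit logistic-regression weights (bias first) with batch gradient descent."""
    w = [0.0] * (len(rows[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(rows, labels):
            err = predict(w, x) - y  # predicted probability minus actual outcome
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        # Step against the average gradient of the log-loss
        w = [wi - lr * g / len(rows) for wi, g in zip(w, grad)]
    return w


if __name__ == "__main__":
    # Hypothetical applicants: [debt_to_income, share_of_missed_payments]
    applicants = [[0.1, 0.0], [0.2, 0.1], [0.15, 0.0], [0.3, 0.2],
                  [0.7, 0.8], [0.8, 0.6], [0.9, 0.9], [0.6, 0.7]]
    defaulted = [0, 0, 0, 0, 1, 1, 1, 1]
    weights = train_logistic(applicants, defaulted)
    for x in ([0.1, 0.05], [0.85, 0.8]):
        print(x, round(predict(weights, x), 3))
```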

AI-Driven Fraud Detection

Critical Role in Banking Security

AI plays a crucial role in enhancing fraud detection capabilities.