As organizations increasingly rely on artificial intelligence for document processing and data extraction, the concept of fidelity risk has become paramount. This risk encompasses the potential discrepancies between source documents and AI-generated outputs, including issues like hallucinations, semantic drift, and information misrepresentation. Understanding and managing these risks is crucial for maintaining data integrity and ensuring reliable information extraction.

Defining Fidelity Risk in AI Document Processing

Fidelity risk in AI document processing represents a fundamental challenge where automated systems fail to maintain accurate correspondence between source documents and extracted information. This risk manifests across multiple dimensions, from basic data extraction errors to complex semantic drift that undermines information integrity.

The core manifestation of fidelity risk emerges through Named Entity Recognition failures, where AI systems struggle with ambiguous references, domain-specific terminology, and contextual disambiguation. These failures compound when processing diverse document formats, creating cascading effects throughout downstream applications.

Document summarization amplifies fidelity concerns through compression artifacts and selective omission of critical details. AI models frequently introduce subtle distortions during abstraction, where paraphrasing alters meaning or eliminates nuanced context essential for accurate interpretation.

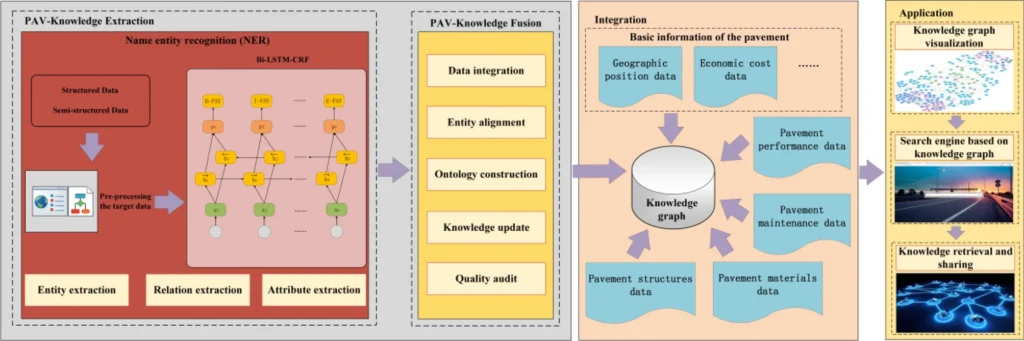

Knowledge graph construction represents perhaps the most complex fidelity challenge, requiring accurate entity extraction, relationship mapping, and semantic consistency across interconnected data points. Errors propagate through graph structures, creating systemic data misrepresentation that affects analytical outcomes.

The business implications of AI fidelity failures extend beyond technical accuracy to encompass regulatory compliance, operational reliability, and decision-making integrity. Organizations must establish comprehensive validation frameworks that combine automated quality metrics with human oversight, particularly for high-stakes applications where fidelity errors carry significant consequences.

AI Hallucinations and Information Integrity

When AI hallucinations occur in document processing, they represent a critical threat to information integrity, where AI systems generate plausible but factually incorrect outputs that were never present in the source documents. Unlike traditional processing errors, hallucinations create fabricated entities, relationships, or numerical values that appear authentic but fundamentally compromise the trustworthiness of extracted data. This phenomenon becomes particularly dangerous in financial document processing, where research indicates that 68% of extraction errors stem from hallucinated numerical values, while 32% involve incorrect entity relationships.

The implications extend beyond isolated inaccuracies to systematic data misrepresentation that can cascade through business operations. When AI systems fill gaps in ambiguous documents with invented information, or when pattern completion mechanisms override actual content, the resulting outputs maintain apparent coherence while being fundamentally unreliable. These integrity violations become especially problematic in knowledge graph construction, where hallucinated entities can create false connections that persist and propagate errors throughout interconnected data structures.

Mitigation requires grounding techniques such as retrieval-augmented generation, which constrains AI outputs to explicitly documented information, combined with post-processing validation that cross-checks extracted data against source documents. Provenance tracking and cryptographic protections further ensure that any alterations to processed information remain detectable, maintaining an auditable chain of data transformation that preserves accountability in AI-powered extraction workflows.

Semantic Drift and Knowledge Graph Accuracy

Beyond the immediate issue of hallucinations lies a more subtle but equally critical challenge: semantic drift within document processing workflows. Unlike outright fabrications, semantic drift represents the gradual erosion of meaning as information traverses multiple processing stages, from initial extraction through knowledge graph integration.

Semantic drift manifests when AI systems progressively alter conceptual relationships during data transformation, compression, or summarization processes. In named entity recognition tasks, this drift occurs when contextual clues become disconnected from their original semantic anchors, causing entities to lose their precise meaning or acquire unintended associations. The phenomenon is particularly pronounced in multi-step processing pipelines where each transformation introduces subtle distortions that compound over time.

Knowledge graphs serve as both a solution and a potential amplifier of drift. While they provide structured semantic relationships that can detect and prevent meaning degradation through contextual validation, they also create new vectors for semantic instability when entity relationships are incorrectly updated or when conflicting information sources introduce inconsistencies. Effective drift management requires implementing information integrity monitoring through cosine similarity thresholds and relationship validation protocols that maintain semantic coherence across processing stages.

The challenge intensifies when dealing with evolving document corpora where new terminology and relationships continuously emerge. Organizations must balance system adaptability with semantic stability, ensuring that data fidelity remains intact while allowing for legitimate conceptual evolution in their knowledge bases.

Measuring and Monitoring Data Extraction Accuracy

Building on the semantic challenges addressed in the previous chapter, establishing robust measurement and monitoring systems becomes critical for maintaining data extraction accuracy in AI document processing workflows. Effective accuracy monitoring requires implementing comprehensive metrics that capture both the quality and completeness of extracted information.

Precision, recall, and F1 score form the cornerstone of extraction accuracy measurement, particularly for structured data extraction scenarios. Precision metrics evaluate the proportion of correctly extracted fields against all extracted fields, while recall measures the completeness of extraction by comparing correctly identified fields to all relevant fields present in source documents. The F1 score provides a balanced assessment, offering a single metric that harmonizes both precision and recall considerations.

Beyond traditional accuracy metrics, monitoring systems must incorporate field-level accuracy tracking and word error rate (WER) measurements to capture granular performance variations across different document types and extraction tasks. Real-time confidence scoring enables dynamic quality assessment, allowing systems to flag potentially problematic extractions for human review before they propagate through downstream processes.

Continuous monitoring frameworks should establish baseline accuracy thresholds tailored to specific use cases and document categories. Iterative evaluation protocols using varied prompts and document samples help identify accuracy drift patterns, enabling proactive adjustments to extraction models before fidelity degradation compromises operational integrity. This systematic approach to accuracy measurement creates the foundation for implementing the risk mitigation strategies explored in the following chapter.

Risk Mitigation Strategies and Best Practices

Building on established monitoring frameworks, implementing comprehensive risk mitigation strategies requires multi-layered validation protocols and proactive quality controls. Effective strategies begin with robust input preprocessing, where documents undergo standardization and format validation before entering AI pipelines, significantly reducing downstream AI hallucinations and extraction errors.

Critical mitigation approaches include implementing retrieval-augmented generation (RAG) frameworks that ground model outputs in verified source documents, preventing semantic drift during processing. Confidence scoring mechanisms flag low-certainty extractions for human review, while automated cross-validation compares outputs against known ground truth datasets. For data extraction workflows, establishing validation checkpoints at each processing stage ensures data quality integrity through boundary checks, completeness verification, and format consistency testing.

Information integrity protection requires combining extractive document summarization with named entity recognition pipelines that feed validated entities into knowledge graphs for relationship verification. Human-in-the-loop oversight provides final validation for high-stakes documents, while continuous monitoring dashboards track extraction accuracy trends, enabling proactive intervention when fidelity degradation occurs. This comprehensive approach maintains document processing reliability while preserving semantic accuracy across diverse document types and formats.

Conclusions

Managing fidelity risk in AI-powered document processing requires a multi-faceted approach combining technical solutions with robust validation processes. As AI systems continue to evolve, organizations must remain vigilant in monitoring and maintaining data accuracy while implementing appropriate safeguards to ensure reliable information extraction and processing.

Your article helped me a lot, is there any more related content? Thanks!