AI Evaluation: Best Practices for Testing and Evaluating Gen AI Tools

Estimated reading time: 10 minutes

Key Takeaways

- Understanding the importance of AI Evaluation in ensuring effective and safe Gen AI tools.

- Exploring key evaluation metrics and performance measurement strategies.

- Identifying challenges in Gen AI evaluation and proposing solutions.

- Learning from real-world applications and case studies.

- Anticipating future trends in Gen AI evaluation.

Introduction

Generative AI tools, often referred to as Gen AI, are reshaping industries from content creation to healthcare. These systems generate new content such as text, images, and code, but their effectiveness and reliability depend on thorough AI Evaluation. This post covers best practices for testing and evaluating Gen AI tools so that they are effective, relevant, and safe, using appropriate Gen AI Evaluation metrics and performance measurements.

Understanding Gen AI Evaluation

What Is Generative AI?

Gen AI systems are capable of creating new content rather than merely analyzing old data. These systems find applications in diverse areas, such as:

- Text Generation: Improving chatbots and aiding content creators.

- Image Generation: Assisting in design and art creation.

- Code Generation: Helping software development processes.

Evaluation is essential for ensuring that Gen AI tools produce high-quality and relevant outputs. Proper evaluation reveals both the strengths and the potential biases of these models.

Setting the Ground for Evaluation Metrics

The Role of Evaluation Metrics

Evaluation metrics serve as objective standards to assess AI model effectiveness. They are crucial for understanding how a Gen AI tool performs over multiple dimensions.

Types of Evaluation Metrics

- Quality Metrics:

  - Coherence: Evaluates logical consistency of generated content.

  - Relevance: Assesses how closely the output aligns with the given input or context.

- Safety Metrics:

  - Toxicity: Detects any harmful or offensive content.

  - Bias Detection: Identifies prejudiced tendencies in model outputs.

- Performance Metrics:

  - Response Time: Measures how swiftly a model can generate outputs.

  - Resource Utilization: Evaluates the computational resources the model consumes, which affects scalability.

Key Gen AI Evaluation Metrics

Generation Quality Metrics

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Measures the quality of machine-generated summaries or translations by how much of a human reference they recover, typically as n-gram overlap [14].

- Perplexity: Used for language models, this metric measures how well a probability model predicts a sample, computed as the exponential of the average negative log-probability per token. Lower values indicate better performance. Source: Addaxis AI.

- BLEU (Bilingual Evaluation Understudy): Commonly used for translation, BLEU compares machine-generated text to human-written references using n-gram precision. Source: Addaxis AI.
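To make these three metrics concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not a replacement for an established metrics library: the whitespace tokenizer, the function names, and the example sentences are all made up for this post, and only the unigram case is shown (full BLEU also combines higher-order n-grams and a brevity penalty).

```python
import math
from collections import Counter


def tokenize(text):
    # Naive whitespace tokenizer (an assumption); real evaluations use a proper one.
    return text.lower().split()


def rouge1_recall(candidate, reference):
    """Fraction of reference unigrams that also appear in the candidate."""
    cand, ref = Counter(tokenize(candidate)), Counter(tokenize(reference))
    overlap = sum(min(count, cand[tok]) for tok, count in ref.items())
    total = sum(ref.values())
    return overlap / total if total else 0.0


def clipped_unigram_precision(candidate, reference):
    """BLEU's building block: fraction of candidate unigrams found in the
    reference, with each token's count clipped to its count in the reference."""
    cand, ref = Counter(tokenize(candidate)), Counter(tokenize(reference))
    overlap = sum(min(count, ref[tok]) for tok, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0


def perplexity(token_probs):
    """Exponential of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Hypothetical example data.
reference = "the cat sat on the mat"
candidate = "the cat is on the mat"

print(rouge1_recall(candidate, reference))              # 5 of 6 reference tokens recovered
print(clipped_unigram_precision(candidate, reference))  # 5 of 6 candidate tokens matched
print(perplexity([0.25, 0.25, 0.25, 0.25]))             # about 4: like guessing among 4 options
```

The perplexity example shows the usual intuition: a model that assigns probability 0.25 to every token is about as uncertain as a uniform choice among four options.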

Safety and Responsibility Metrics

- Toxicity: Evaluates the presence of harmful content to prevent the spread of inappropriate information.

- Bias Detection: Assesses outputs for unfair biases, ensuring ethical standards.
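One simple way to turn these two ideas into numbers is sketched below. It assumes you already have a toxicity score between 0 and 1 for each output from some classifier (not shown here), and that prompts were grouped so they differ only in a demographic term. The function names, the 0.5 threshold, and the scores are hypothetical, and a rate gap is only one coarse signal, not a full fairness audit.

```python
def toxicity_rate(scores, threshold=0.5):
    """Share of outputs whose toxicity score meets or exceeds the threshold."""
    if not scores:
        return 0.0
    return sum(score >= threshold for score in scores) / len(scores)


def group_rate_gap(scores_by_group, threshold=0.5):
    """Largest difference in flagged-output rate between any two groups."""
    rates = {
        group: toxicity_rate(scores, threshold)
        for group, scores in scores_by_group.items()
    }
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical classifier scores for outputs from two prompt groups.
scores_by_group = {
    "group_a": [0.10, 0.20, 0.70, 0.05],
    "group_b": [0.60, 0.80, 0.10, 0.30],
}

gap, rates = group_rate_gap(scores_by_group)
print(rates)  # flagged rate per group
print(gap)    # a large gap suggests the model treats the groups differently
```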

Performance Metrics

- Response Time: Evaluates the model’s speed, crucial for user satisfaction.

- Resource Utilization: Assesses computational needs, impacting cost and scalability.
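Both performance metrics can be measured with the Python standard library, as in the rough sketch below. `fake_generate` is a hypothetical stand-in for a real model call, and `measure` is a name invented for this example. Note that `tracemalloc` tracks only memory allocated by Python itself, so it will not capture GPU memory or a remote service's resource use, and tracing adds some overhead to the timings.

```python
import statistics
import time
import tracemalloc


def measure(generate, prompts):
    """Time each call and record peak Python memory allocated during the run."""
    if not prompts:
        raise ValueError("need at least one prompt")
    latencies = []
    tracemalloc.start()
    for prompt in prompts:
        start = time.perf_counter()
        generate(prompt)
        latencies.append(time.perf_counter() - start)
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    latencies.sort()
    # Simple index-based 95th percentile; the last element for small samples.
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {
        "median_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "peak_bytes": peak_bytes,
    }


def fake_generate(prompt):
    # Hypothetical stand-in for a real model call.
    time.sleep(0.001)
    return prompt.upper()


report = measure(fake_generate, ["hello"] * 20)
print(report)
```

Reporting a high percentile alongside the median matters because users tend to notice the slowest responses, not the typical one.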

Performance Measurement Strategies

Best Practices for Measuring Gen AI Performance

1. Automated Evaluation

Tools such as Azure AI Foundry facilitate automated and scalable assessments of Gen AI models. This approach ensures efficiency and consistency. Source: Google Cloud.

2. Human-in-the-Loop Evaluation

Combining automated metrics with human insights captures subtleties such as creativity or contextual relevance. Source: DataStax.

3. Benchmarking Against Industry Standards

Comparing Gen AI tools against industry benchmarks helps identify areas for enhancement and competitive differentiation. Source: DataForce.

4. Continuous Monitoring

Consistent monitoring helps detect and manage performance degradation over time. [13]
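As a minimal sketch of what continuous monitoring can look like, the class below keeps a rolling window of recent quality scores and raises a flag when their mean falls too far below a baseline. The class name, window size, tolerance, and scores are illustrative assumptions; production monitoring would usually add alerting and statistical tests on top of this.

```python
from collections import deque


class DriftMonitor:
    """Flags degradation when the rolling mean drops below baseline - tolerance."""

    def __init__(self, baseline, window=5, tolerance=0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # oldest score is dropped automatically

    def record(self, score):
        """Add a score; return True once a full window has degraded."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet
        mean = sum(self.scores) / len(self.scores)
        return mean < self.baseline - self.tolerance


# Hypothetical daily quality scores for a deployed model.
monitor = DriftMonitor(baseline=0.80, window=3, tolerance=0.05)
alerts = [monitor.record(score) for score in [0.82, 0.79, 0.80, 0.70, 0.65]]
print(alerts)  # only the last reading pushes the rolling mean below 0.75
```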

Challenges in Gen AI Evaluation

Common Challenges

- Subjectivity in Quality Assessment: Elements like creativity or relevance are hard to measure objectively.

- Data Limitations: Securing diverse datasets for evaluation is challenging.

- Evolving Standards: Rapid advancements in Gen AI technology require continual updates in evaluation criteria.

- Potential Biases: AI models may inherit biases from their training data.

Solutions

- Blend qualitative and quantitative assessments.

- Regularly refresh and diversify datasets.

- Keep up with industry updates and best practices.
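Before blending human judgments with automated scores, it helps to check how much the human raters agree with each other, since low agreement is a sign of the subjectivity problem described above. Cohen's kappa is one common statistic for this; it is not named in the sources for this post, and the function name and labels below are hypothetical. A minimal sketch for two raters:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two raters, corrected for agreement expected by chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: probability both raters pick the same label independently.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(
        (counts_a[label] / n) * (counts_b[label] / n)
        for label in set(labels_a) | set(labels_b)
    )
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of six model outputs by two reviewers.
rater_a = ["good", "good", "bad", "good", "bad", "bad"]
rater_b = ["good", "bad", "bad", "good", "bad", "good"]

kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 3))  # 1.0 is perfect agreement, 0 is chance level
```

Here the raters agree on four of six items, but half of that agreement would be expected by chance, so kappa comes out well below the raw agreement rate.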

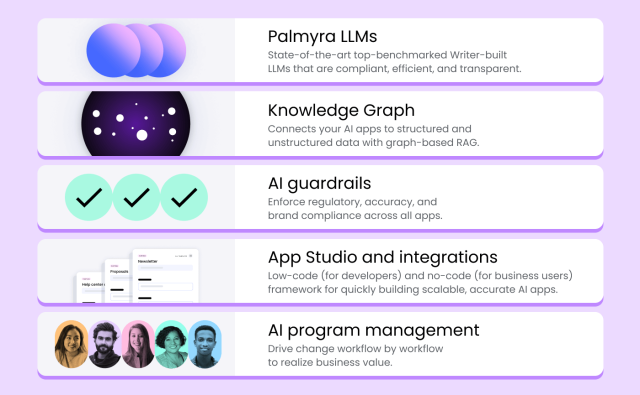

Tools and Techniques for Effective Evaluation

Common Tools for AI Evaluation

Azure AI Foundry

Offers both automated metrics and human-in-the-loop assessments, covering a comprehensive evaluation spectrum. Source: Google Cloud.

FMEval

Provides a set of standardized metrics for assessing quality and responsibility in language models. [17]

OpenAI’s Evaluation Framework

This framework provides diverse metrics to assess the quality, coherence, and relevance of AI outputs. Source: Addaxis AI.

Case Studies and Real-World Applications

Walmart: A Success in AI-Powered Inventory Management

Walmart implemented AI tools for inventory management and reportedly improved efficiency by 97%, a result attributed to rigorous evaluation. Customizing evaluation metrics to align with operational goals was key. Source: Psico-Smart.

Mount Sinai Health System: Healthcare Innovation with Gen AI

Mount Sinai reportedly reduced hospital readmission rates by 20% through AI evaluation, showing why robust assessment is necessary in healthcare. The main lessons were the importance of data quality and ethics. Source: Psico-Smart.

Lessons Learned

Tailored evaluation leads to better outcomes; continuous assessment results in notable performance improvements.

Future of Gen AI Evaluation

Emerging Trends

AI-Powered Evaluation

Using advanced AI models for Gen AI evaluation enhances scalability and provides context-aware assessments. Source: DataStax.

Ethical AI Evaluation

There is growing emphasis on the ethical impact of Gen AI tools, aligning outputs with societal and regulatory standards. [16]

Adaptive Evaluation Frameworks

Frameworks that adapt to new AI capabilities future-proof evaluation strategies. [11]

Evaluation methods must evolve with AI advancements. Continuous education and collaboration with industry bodies are essential.

Conclusion

Effective AI Evaluation is fundamental to maximizing the benefits of Gen AI tools. It ensures reliability, mitigates risks, and enhances user trust. Continuous learning and adaptation to evolving industry standards are crucial for sustained success.

Investing in comprehensive evaluation not only yields competitive advantages but also improves performance outcomes.

Call to Action

We invite you to share your experiences, challenges, or questions about Gen AI evaluation in the comments. For extended learning on Gen AI Evaluation metrics and performance measurement, explore resources from industry leaders like NIST. [16]

Through diligent evaluation practices, your organization can harness the full potential of Gen AI tools.